
Development and Integration of Algorithms that Extract Emotion from Music

Werner Van Belle - werner@yellowcouch.org, werner.van.belle@gmail.com

Abstract: The described project aims to link advanced audio signal processing techniques to empirical psychoacoustic testing in order to develop algorithms that can automatically retrieve (part of) the emotions humans associate with music. The project is set up as a cooperation between 3 partners: Norut IT, the Psychology department at the University of Tromsø and the music conservatory at Hoyskolen in Tromsø. Commercial relevance of the project is found in audio content extraction for databases and search engines, quantitative assessment of emotion in music for teaching, automatic creation of playlists, categorizing sound libraries and plugins for sound production software used in studios.

Keywords:

audio content extraction, psychoacoustics, BPM, tempo, rhythm, composition, echo, spectrum, sound color

- 1 Research Problem

- 2 Background / State of the Art

- 3 Project Plan

- 4 Commercial Relevance

- 5 Budget

- 6 Ethics

- 7 Professional position and strategic importance of the project

1 Research Problem

Most of us feel that music is closely related to emotion, or that music expresses emotions and/or can elicit emotional responses in listeners. There is no doubt that music is a human universal: every known human society has made music from its very beginnings. One fascinating possibility is that although cultures may differ in how they make music and may have developed different instruments and vocal techniques, we may all perceive music in a very similar way. That is, there may be considerable agreement on which emotion we associate with a specific musical performance [CFB04,DeB01,Jus01,CBB00,Mak00,SW99,Kiv89,Boz85,Cro84,MB82,Ber74,LvdGP66,Coo59].

The emotions contained within music are often assumed to be simple basic emotions. However, one might expect that combinations of primary emotions lead to more complex emotional patterns [LeD96,OT90,Plu80]. What remains unclear is which underlying dimensions give structure and meaning to music. Thus one fundamental question for the present project is: how can we measure, in a mathematical or computational way, the emotional content of music?

We propose here a computational method for establishing a similarity structure between musical pieces, based on various parameters. Our main goal is to explore which of these parameters best account for human judgments of emotional content.

2 Background / State of the Art

From an engineering point of view, psychoacoustics has been very important in the design of compression algorithms such as MPEG. A large body of work exists on psychoacoustic 'masking'. Removing information that humans do not hear improves compression without audible content loss. Frequency masking, temporal masking and volume masking are all important tools for present-day coders and decoders. The use of appropriate filter banks that respect the sensitivity of the human ear is another crucial factor. To this end the Bark frequency scale is often used [Dim05,BBQ+96,HBEG95,HJ96,Hel72]. Notwithstanding the variety and quality of existing work on this matter, there is little relation between understanding what can be thrown away (masking) and what leads humans to associate specific feelings with music. In this proposal we aim to extract emotions associated with music, without wanting to compress the signal.
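As a point of reference for the Bark scale mentioned above, the following minimal sketch converts a frequency in Hz to a critical-band rate in Bark. It assumes the analytical approximation by Zwicker and Terhardt; the works cited here may rely on a different approximation, and the function name `hz_to_bark` is hypothetical.

```python
import math


def hz_to_bark(freq_hz):
    """Approximate critical-band rate (Bark) for a frequency in Hz.

    Assumed formula: the Zwicker & Terhardt approximation. This is an
    illustrative choice, not necessarily the one used by the cited work.
    """
    return (13.0 * math.atan(0.00076 * freq_hz)
            + 3.5 * math.atan((freq_hz / 7500.0) ** 2))


# Equal steps in Bark cover ever larger steps in Hz at high frequencies.
for f in (100, 500, 1000, 4000, 10000):
    print(f, "Hz ->", round(hz_to_bark(f), 2), "Bark")
```

A filter bank spaced evenly on this scale therefore devotes more bands to low frequencies, where the ear resolves pitch more finely.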

Current Dimensions

Musical emotions can be characterized in much the same way as the basic human emotions. Happy and sad emotional tones are among the most commonly reported in music, and these basic emotions may be expressed, across musical styles and traditions, by similar structural features. Among these, pitch and rhythm seem to be basic features, which research on infants has shown we are able to perceive early in life [HJ05]. Recently, it has been proposed that at least some portion of the emotional content of a musical piece is due to its close relationship to the vocal expression of emotions (either as used in speech, e.g., a sad tone, or in non-verbal expressions, e.g., crying). In other words, the accuracy with which specific emotions can be communicated depends on specific patterns of acoustic cues [JL03]. This account can explain why music is considered expressive of certain emotions, and it can also be related to evolutionary perspectives on the vocal expression of emotions. More specifically, some of the relevant acoustic cues that pertain to both domains (music and verbal communication, respectively) are speech rate/tempo, voice intensity/sound level, and high-frequency energy. Speech rate/tempo may be most related to basic emotions such as anger and happiness (when it increases) or sadness and tenderness (when it decreases). Similarly, high-frequency energy plays a role in anger and happiness (when it increases) and in sadness and tenderness (when it decreases). Different combinations and/or levels of these basic acoustic cues could result in several specific emotions. For example, fear may be associated in speech or song with low voice intensity and little high-frequency energy, whereas panic is expressed by increasing both intensity and energy.

Juslin & Laukka [JL03] have proposed the following dimensions of music (each of which also has a correlate in speech) as those that define the emotional structure and expression of music performance: pitch or F0 (i.e., the lowest cycle component of a waveform); F0 contour or intonation; vibrato; intensity or loudness; attack or the rapidity of tone onset; tempo or the velocity of music; articulation or the proportion of sound-to-silence; timing or rhythm variation; and timbre or high-frequency energy or an instrument's/singer's formant.

Additional Dimensions

Certainly, the above is not an exhaustive list of all the relevant dimensions of music or of the relevant dimensions of emotional content in music. Additional dimensions might include echo, harmonics, melody and low frequency oscillations. We briefly describe these additional dimensions:

- Sound engineers recognize that echo, delay and spatial positioning [WB89,J.83,K.87,AJ96] influence the feeling of a production. A short echo of high frequencies combined with long delays makes the sound 'cold' (concrete wall room), while a short echo without delay, preserving the middle frequencies, makes the sound 'warm'. No echo makes the sound clinical and unnatural. Research into room acoustics [BK92,D.95,PM86,Y.85,LA62] shows that digital techniques have great difficulty simulating the correct 'feeling' of natural rooms, illustrating the importance of time-correlation as a factor.

- 'Harmonics' refers to the interplay between a tone and integer multiples of that tone. A frequency together with all its harmonics forms the characteristic waveform of an instrument. Artists often select instruments based on the 'emotion' captured by an instrument (e.g., a clarinet typically plays 'funny' fragments while a cello plays 'sad' fragments).

- Melody & pitch intervals. We believe that key, scale and chords also influence the feeling of a song. The terms 'major' and 'minor' chords already illustrate this, but also classical Indian musicology suggests this [OL03].

- Low frequency oscillations, as observed in electroencephalograms (EEG), provide information on the modus operandi of the brain. Delta-waves (below 4 Hz), theta-waves (4 to 8 Hz), alpha-waves (8 to 12 Hz) and beta-waves (13 to 30 Hz) all relate to some form of attention and focus. They also seem to relate to mood [AS85,HD90]. The brainwave pattern can synchronize to external cues in the form of video and audio [APR87,Ken88]. Interestingly, major and minor chords produce second order undertones at frequencies of 10 Hz and 16 Hz respectively, which places them in distinct brainwave bands. Rhythmical energy bursts also seem to influence the brainwave pattern (techno and 'trance' vs 'ambient'). We believe that both a quantification of the low frequency content and a measurement of long period energy oscillations in songs can provide crucial input into our study [Mor92,BZ01].

BpmDj


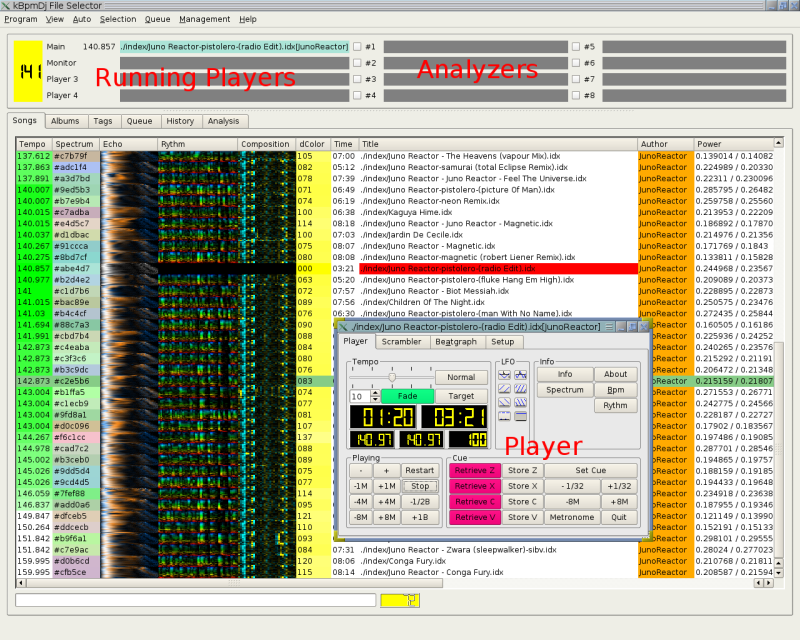

The open source software BpmDj [Bel05a] supports the analysis and storage of a large number of soundtracks. The program has been developed since 2000 as a hobby project. It contains advanced algorithms to measure spectrum, tempo, rhythm, composition and echo characteristics.

Tempo module - Five different tempo measurement techniques are available, of which autocorrelation [OS89] and ray-shooting [Bel04] are the most appropriate. Other existing techniques include [YKT+95,UH03,SD98,GM97]. All analyzers in BpmDj make use of the psychoacoustic Bark scale [GEH98,KSP96,EH99]. The spectrum or sound color is visualized as a 3-channel color (red/green/blue) based on a Karhunen-Loève transform [C.M95] of the available songs.
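To make the autocorrelation approach to tempo measurement concrete, here is a minimal sketch, not BpmDj's actual implementation. It assumes the song has already been reduced to an energy envelope sampled at `frame_rate` frames per second; the function names and the 60-180 BPM search range are hypothetical choices for illustration.

```python
def autocorrelation(signal, lag):
    """Unnormalized autocorrelation of a sequence at one lag."""
    return sum(signal[i] * signal[i + lag] for i in range(len(signal) - lag))


def estimate_bpm(envelope, frame_rate, min_bpm=60.0, max_bpm=180.0):
    """Pick the beat period whose lag maximizes the autocorrelation.

    envelope   -- energy values, one per frame (assumed precomputed)
    frame_rate -- envelope frames per second
    """
    mean = sum(envelope) / len(envelope)
    centered = [x - mean for x in envelope]
    # A tempo of B beats per minute corresponds to a lag of 60 * rate / B.
    min_lag = int(round(60.0 * frame_rate / max_bpm))
    max_lag = int(round(60.0 * frame_rate / min_bpm))
    best_lag = max(range(min_lag, max_lag + 1),
                   key=lambda lag: autocorrelation(centered, lag))
    return 60.0 * frame_rate / best_lag


# Synthetic envelope: one energy burst every 0.5 s at 100 frames per second.
envelope = [1.0 if i % 50 == 0 else 0.0 for i in range(1000)]
print(estimate_bpm(envelope, frame_rate=100))
```

Restricting the lag range is one simple way to limit confusion between a tempo and its half or double; a real analyzer would need a more careful treatment of such tempo harmonics.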

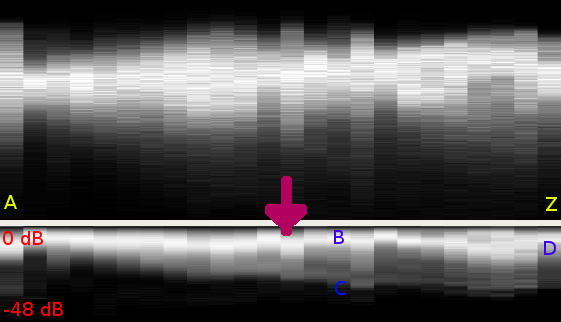

Echo/delay Modules - Measuring the echo characteristics is based on a distribution analysis of the frequency content of the music and then enhancing it using a differential autocorrelation [Bel05c]. Fig. 2 shows the echo characteristic of a song.

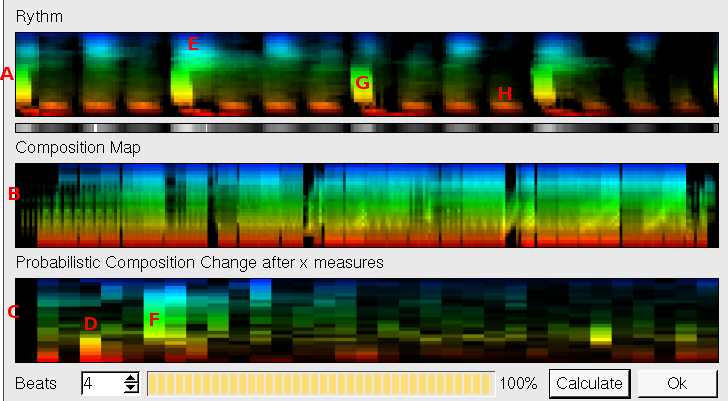

Rhythm/composition modules - Rhythm and composition properties rely on correct tempo information. Rhythm relies on cross correlation and overlaying of all available measures. Composition relies on measuring the probability of a composition change after ![]() measures. Fig. 2 presents a rhythm and composition pattern.

From an end-user point of view the program supports distributed analysis, automatic mixing of music, distance metrics for all analyzers as well as clustering and automatic classification based on this information. Everything is tied together in a Qt [htt] based user interface. Figure 1 shows a screenshot of BpmDj.

Despite its many uses BpmDj has one crucial problem: the distance metrics and the different weighting schemes have been tuned ad hoc to some degree of satisfaction. In other words, it is very likely that the current weighting schemes and metrics are far from optimal, and a large number of possible metrics are still lacking.

3 Project Plan

The proposed project will gather psychoacoustic information using test subjects and measure their emotional response to various sounds. The project will be conducted in three phases: i) initial screening, ii) acquisition of statistical information, iii) validation. In the first phase we screen different kinds of music and test their relevance to this study. This phase relies on open questionnaires and free-form answers. In the second phase we use an engineered collection of songs and acquire statistically relevant information by putting closed questions to the subject group. In the last phase, the validation phase, we realize a number of compositions based on the acquired know-how and test their expected impact on the subject group. This phase allows us to isolate the different parameters we suspect are responsible for emotional content.

In parallel with data acquisition we will further develop signal processing modules to measure specific song properties.

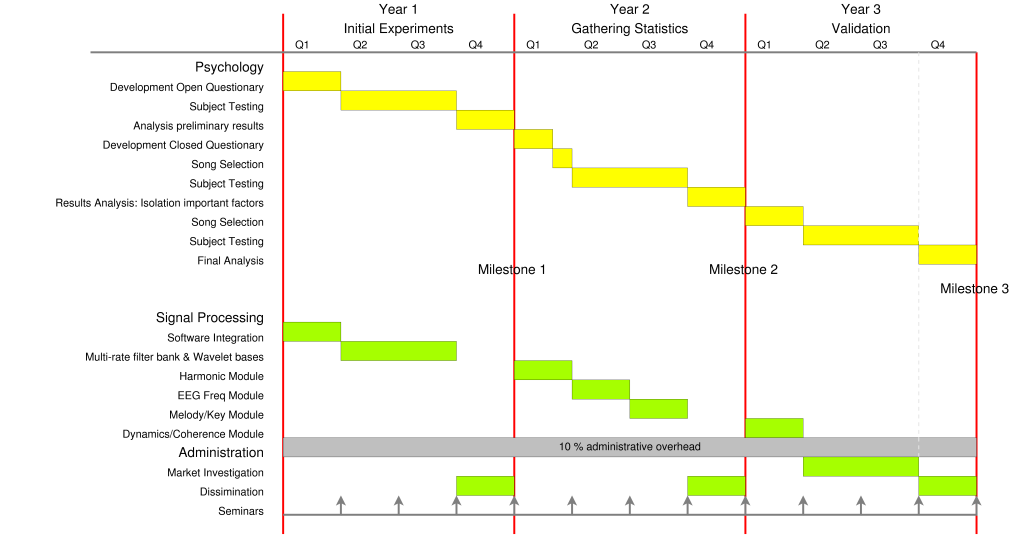

Milestones

Fig 3 presents the timeline of the project and the different work packages. The first milestone (![]() year) falls after setting up a testbed, presenting test subjects with the open questionnaire and analyzing the results. The second milestone (![]() year) falls after a thorough analysis of the involved parameters using a closed questionnaire. The last milestone (end of the project) is the final analysis of the validated results.

Psychophysics experiments.

The stimuli we will present to the listeners will be short (a couple of measures) and will capture the essence of the song. All sounds will be presented to participants through stereo headphones. Participants will be asked to rate on a step-scale how well a particular emotion describes the music/sounds heard through the headphones. Responses and latency will be recorded from participants' mouse clicks on a screen display implemented in an extension of BpmDj. All experiments will use within-subjects designs (unless otherwise indicated). Analysis of variance will be used to test for interactions between the factors in the experiments. Statistical tools such as principal component analysis or cluster analysis will be applied to the data set obtained from the participants, so as to reveal the underlying structure or features used by the participants in their attributions of emotional content to music.

Pupillometry

will be performed by means of the Remote Eye Tracking Device (R.E.D.), built by SMI-SensoMotoric Instruments in Teltow (Germany). Analyses of the recordings will be computed with the iView software, also developed by SMI. The R.E.D. II can operate at a distance of 0.5-1.5 m and the eye-tracking sample rate is 50 Hz, with a resolution better than 0.1 degree. The eye-tracking device operates by determining the positions of two elements of the eye: the pupil and the corneal reflection. The sensor is an infrared light sensitive video camera typically centered on the left eye of the subject. The coordinates of all the boundary points are fed to a computer that, in turn, determines the centroids of the two elements. The vectorial difference between the two centroids is the "raw" computed eye position. Pupil diameters are expressed as the number of video pixels of the horizontal and vertical diameters of the ellipsoid projected onto the video image by the eye pupil at every 20 ms sample.
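The centroid computation described above can be sketched as follows. This is an illustration only and not the iView software's method: it assumes each element is given as a list of (x, y) boundary points in video pixels and takes the centroid to be their plain average; all names are hypothetical.

```python
def centroid(points):
    """Average position of a list of (x, y) boundary points."""
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


def raw_eye_position(pupil_boundary, reflection_boundary):
    """Vector difference between pupil and corneal-reflection centroids."""
    px, py = centroid(pupil_boundary)
    rx, ry = centroid(reflection_boundary)
    return (px - rx, py - ry)


SAMPLE_RATE_HZ = 50
SAMPLE_INTERVAL_MS = 1000 / SAMPLE_RATE_HZ  # 50 Hz gives one sample per 20 ms

# Hypothetical boundary points, in video pixels.
pupil = [(0, 0), (4, 0), (4, 4), (0, 4)]
reflection = [(1, 1), (2, 1), (2, 2), (1, 2)]
print(raw_eye_position(pupil, reflection), SAMPLE_INTERVAL_MS)
```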

3.1 Task A: Data Acquisition

3.1.1 Phase 1: Initial Screening

In the initial screening phase we rely on an open questionnaire and a random selection of songs. In this phase we want to determine which songs are useful for further statistical analysis and which parameters should be taken into account. For every song we are interested in 1) the reported emotion, 2) the strength of the reported emotion, and 3) why this emotion is present.

The following information is believed to be of importance and should be included in the questioning. a) Cultural Impact - what is the impact of nationality and culture on the reported emotion? b) Level of schooling - artists working with music might have a better feeling for music than unschooled people. c) Semantic Information - what is the impact of semantic information, such as recognizing the artist, knowing the song, or understanding the lyrics? d) Emotional Reporting - how well are human subjects able to report emotions? Might they require training in advance? What is the impact of their initial mood on the reports? A report by [FMN+98] already demonstrated that extra emotional validation, such as done with pupillometry, is usually necessary. e) Memory - what is the impact of the presentation sequence? Does information from one song carry over to the next? Is there a lag effect in reported emotions? Here we should also be interested in the experimentation time: is there a correlation between the accuracy of answers and the time the subject has been listening to songs? f) Reporting Strategy - what is the relevance of 'emotion strength' to the reported emotion? Does emotion strength limit the information we might obtain or not? Likewise, what is the relevance of response time? Is a short response time better suited to consistent information, or is a long response time, allowing time to verbalize, more informative?

Results: The result of the initial screening will be i) a technical report covering our observations, ii) a report explaining the statistical process we will use for further analysis and iii) a selection of songs which we will use for further statistical analysis. The database we aim to create should avoid songs with an unclear signature.

Risks: The risk involved in this preliminary screening is that the creation of a song database based solely on consistency might hide important information. If we only select songs in which the emotion is a synergetic effect of different parameters, then we might lose the ability to distinguish those parameters properly. E.g., if a slowly played violin is combined with minor chords it might be recognized as 'sad'. By selecting only songs in which violins are in minor chords and played slowly we will not be able to make a distinction between the different parameters. Therefore it is important to consider the parameters we will measure afterwards and select a uniform distribution based on the 'why' response.

A second risk is that the open questionnaire will take a long time to answer and that, to gain some general understanding, we need to screen a large number of songs. To alleviate these problems we will rely on particularly enthusiastic subjects.

3.1.2 Phase 2: Acquisition of Reliable Metrics

After initial screening we will design a set of experiments that will gather reliable information. Design of Experiments (DoE) allows one to vary all relevant factors systematically. When the results of the experiments are analyzed, they identify the factors that most influence the results, as well as details such as the existence of interactions and synergies between factors. Unscrambler [htt05b] will provide us with the ability to do this properly.
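As an illustration of varying all factors systematically, the sketch below enumerates a full factorial design, in which every combination of factor levels appears once. It is not the Unscrambler API; the factor names and levels are hypothetical placeholders for whatever the initial screening identifies.

```python
from itertools import product


def full_factorial(factors):
    """Return every combination of factor levels as a list of dicts."""
    names = list(factors)
    return [dict(zip(names, combo))
            for combo in product(*(factors[name] for name in names))]


# Hypothetical design variables and levels, for illustration only.
factors = {
    "tempo": ["slow", "fast"],
    "key": ["major", "minor"],
    "echo": ["none", "short", "long"],
}
design = full_factorial(factors)
print(len(design), "runs; first run:", design[0])
```

The number of runs grows as the product of the level counts, which is one reason a fractional design or a careful choice of factors may be preferable when subject endurance is limited.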

The design variables encompass a set of measured song properties (see section 3.2). Tentatively, we believe echo, tempo, harmonics, key and long period oscillations (resembling EEG frequencies) will be a subset of the design variables. The response variables encompass the emotional response, quantified using pupillometry. If appropriate, we might consider decomposing emotional responses into primary emotions [LeD96,OT90,Plu80]. The experiment itself requires the construction of a closed questionnaire (targeting the response variables) and a selection of songs (providing the design variables).

After performing the experiment, multivariate analysis will reveal the relation between the design variables and the response variables.

Result: The result of this work package is a well designed experiment, its results and analysis, answering in detail which signal factors provide emotional content and how those factors interrelate.

Risks: The design of experiment should be performed very carefully, taking into account all factors determined in the initial task. Especially testing sequence and subject endurance should be taken into account properly. It might turn out that during the design of the experiment, consecutive tests might provide more information, resulting in a changing work flow.

3.1.3 Phase 3: Validation

In the validation phase we will take the experimental design one step further. Instead of selecting appropriate songs, music will be created to test specific parameters. This is especially important for parameters that cannot be easily isolated using standard songs. Parameters of interest include a) dynamics, such as fortissimo, forte, mezzoforte, mezzopiano, piano and pianissimo, b) attack and sustain factors, such as tenuto, legato, staccato and marcato, c) microtonality, to measure the impact of detuning, d) melodic tempo: largo, larghetto, adagio, andante, moderato, allegro, presto and prestissimo, e) song waveforms, which can be altered by relying on different instruments, and f) echo and delay characteristics, which can be influenced in the sound production phase. Further parameters of influence will be g) rhythm (time signatures, polyrhythmic structures), h) key (major, minor and modal scales) and i) melody (ambitus and intervals). By creating a number of etudes, presented in different styles, we will validate our research.

A final validation of the presented work will consist of the creation of a composition wandering through different emotions. These will range from primary emotions towards more complex and layered emotions. To this end, input will be given into Geir Davidsen's current work on 'new playing techniques for brass'. The composition and etudes will be presented to a large audience and be part of the popular dissemination of the research results.

3.2 Task B: Signal Processing Modules

Measuring subjective responses to music (Task A) provides only part of the information necessary to understand the relation between audio content and emotional perception.

Signal processing techniques, developed in this task, will enable us to link subjective perception to quantitatively measured properties. BpmDj currently supports measurement of tempo, echo, rhythm and compositional information. We will extend BpmDj with new modules, able to capture information relevant to the emotional aspects of music. Below we describe the modules we will develop.

The key/scale module will measure the occurrence of chords by measuring individual notes. To provide information on the scale (equal-tempered, pure major, pure minor, Arabic, Pythagorean, Werckmeister, Kirnberger, slendro, pelog and others) this module will also measure the tuning/detuning of the different notes.
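One way to express the tuning/detuning of a measured note is its deviation in cents from the nearest equal-tempered pitch. The sketch below assumes a 440 Hz reference for A4 and MIDI note numbering; both are illustrative assumptions, as is the function name, and the module itself would have to handle non-equal-tempered scales as well.

```python
import math

A4_HZ = 440.0  # assumed tuning reference, MIDI note 69


def nearest_note_and_detuning(freq_hz):
    """Return (nearest MIDI note number, deviation from it in cents)."""
    midi = 69 + 12 * math.log2(freq_hz / A4_HZ)
    nearest = round(midi)
    # One equal-tempered semitone spans 100 cents.
    return nearest, 100.0 * (midi - nearest)


for f in (440.0, 446.0, 261.63):
    note, cents = nearest_note_and_detuning(f)
    print(f, "Hz -> note", note, "detuned by", round(cents, 1), "cents")
```

Collecting these deviations per pitch class over a whole song gives a tuning profile that could then be compared against the theoretical offsets of the scales listed above.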

The dynamic module will measure energy changes at different frequencies. First order energy changes provide attack and decay parameters of the song. Second order energy changes might provide information on song temperament.
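First and second order energy changes can be sketched as successive differences of a framewise energy sequence. For brevity this sketch works on a single band of raw samples rather than on separate frequency bands as the module would; the function names and frame size are hypothetical.

```python
def frame_energies(samples, frame_size):
    """Sum of squared samples in consecutive non-overlapping frames."""
    return [sum(s * s for s in samples[i:i + frame_size])
            for i in range(0, len(samples) - frame_size + 1, frame_size)]


def differences(values):
    """Change between each value and its successor."""
    return [b - a for a, b in zip(values, values[1:])]


samples = [0, 0, 1, 1, 2, 2, 1, 1]
energy = frame_energies(samples, frame_size=2)
first_order = differences(energy)        # positive: attack, negative: decay
second_order = differences(first_order)  # how quickly the change itself varies
print(energy, first_order, second_order)
```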

The EEG module will measure low frequency oscillations between 0 and 30 Hz using a digital filter on the energy envelope of a song.
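A rough sketch of measuring low frequency oscillations in an energy envelope follows. Note the substitution: instead of the digital filter mentioned above, it uses a direct discrete Fourier transform of the envelope and sums the power per band, which is simpler to show in a few lines. The band edges are those quoted earlier in this proposal; the function name and the assumption of a precomputed envelope are hypothetical.

```python
import cmath
import math

# Band edges in Hz, as quoted in the discussion of brainwaves.
BANDS = {"delta": (0.0, 4.0), "theta": (4.0, 8.0),
         "alpha": (8.0, 12.0), "beta": (13.0, 30.0)}


def band_powers(envelope, envelope_rate):
    """Power of the envelope's oscillations in each band (DC excluded)."""
    n = len(envelope)
    mean = sum(envelope) / n
    centered = [x - mean for x in envelope]
    powers = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2 + 1):
        freq = k * envelope_rate / n
        if freq > 30.0:
            break
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(centered))
        for name, (lo, hi) in BANDS.items():
            if lo <= freq < hi:
                powers[name] += abs(coeff) ** 2
    return powers


# Synthetic envelope pulsing at 10 Hz, sampled at 100 Hz for 2 seconds.
envelope = [1.0 + math.cos(2 * math.pi * 10 * i / 100) for i in range(200)]
powers = band_powers(envelope, envelope_rate=100)
print(max(powers, key=powers.get))
```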

The harmonic module will inter-relate different frequencies in a song by investigating the probability that specific frequencies occur together. A Bayesian classification of the time based frequency and phase content will determine different classes. Every class will describe which attributes (frequencies and phases) belong together, thereby providing a characteristic sound or waveform of the music. This classification will allow us to correlate harmonic relations to the perception of music. Autoclass [SC95,CS96] will perform the Bayesian classification.
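As a much simpler stand-in for the Bayesian classification, the idea of frequencies occurring together can be illustrated by counting how often pairs of frequencies are active in the same analysis frame. This is not Autoclass and ignores phase; the input format (one set of active frequencies per frame) and the function name are assumptions made for the example.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_probabilities(frames):
    """Fraction of frames in which each pair of frequencies is active together."""
    pair_counts = Counter()
    for active in frames:
        for pair in combinations(sorted(set(active)), 2):
            pair_counts[pair] += 1
    total = len(frames)
    return {pair: count / total for pair, count in pair_counts.items()}


# Hypothetical active frequencies (Hz) in four analysis frames.
frames = [{220, 440, 660}, {220, 440}, {330, 660}, {220, 440, 660}]
probabilities = cooccurrence_probabilities(frames)
print(probabilities[(220, 440)])
```

Pairs with a high co-occurrence probability, such as a tone and its integer multiples, are candidates for belonging to the same characteristic waveform.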

The melody module will rely on a similar technique by measuring relations between notes in time.

All of the above modules need to decompose a song into its frequency content. To this end, we will initially make use of a sliding window Fourier transform [OS89]. Later in the project, integration of a multi-rate filterbank will achieve more accurate decomposition. Existing filterbanks include ![]() octave filter banks [J.93] and the psycho-acoustic MP3 filterbank [BBQ+96], which will even allow us to work immediately with MP3 frames. We will also investigate wavelet based filterbanks [Kai96] because then we can experiment with different wavelet bases, accurately capturing the required harmonics.
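A sliding window Fourier transform can be sketched in a few lines. The version below is a minimal, unoptimized illustration using a direct DFT and a periodic Hann window; the window type, window size and hop size are assumptions, and a practical module would use an FFT.

```python
import cmath
import math


def sliding_window_spectra(signal, window_size, hop):
    """Magnitude spectrum (bins 0..window_size/2) of each windowed frame."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * i / window_size)
              for i in range(window_size)]
    spectra = []
    for start in range(0, len(signal) - window_size + 1, hop):
        frame = [signal[start + i] * window[i] for i in range(window_size)]
        spectra.append([
            abs(sum(x * cmath.exp(-2j * math.pi * k * i / window_size)
                    for i, x in enumerate(frame)))
            for k in range(window_size // 2 + 1)
        ])
    return spectra


# Test tone with exactly 8 cycles per 64-sample window.
signal = [math.sin(2 * math.pi * 8 * i / 64) for i in range(256)]
spectra = sliding_window_spectra(signal, window_size=64, hop=32)
peak_bins = [max(range(len(s)), key=s.__getitem__) for s in spectra]
print(len(spectra), "frames, peak bins:", peak_bins)
```

The fixed window size gives the same frequency resolution in every band, which is the limitation that the multi-rate and wavelet filterbanks mentioned above are meant to address.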

Results: Different modules to quantify measures developed in work package 1. These include key/scale, dynamics, low frequency oscillation, harmonic and melody measures.

Risks: The preliminary analysis might indicate the need for different and/or extra signal processing modules. We believe that the two basic technologies supporting this work package (Bayesian classification and multi-rate signal decomposition) should make it easy to realize new modules quickly. A second risk lies in using the already available modules. E.g., the tempo characteristic returns one number, without measuring tempo-harmonics. Similarly, the echo characteristic might need revision to capture information obtained from the harmonic module.

3.3 Dissemination of Results

Publication

The research planned in the present proposal will primarily be of interest to the international research communities in cognitive science and computer science. Articles based on the research will be submitted to top-end journals in cognitive science, such as Cognitive Psychology, Cognition, or Cognitive Science. In addition, findings from this research will be presented at international conferences.

Artefacts

produced in this project will be open sourced in order to attract international attention from computer scientists working in the field of database meta-data extraction and content extraction.

Seminars

will be organized every 3 months. Both invited speakers and result dissemination will take place through these seminars. We hope to connect to researchers with similar interests through this open forum. A mailing list will be created for this particular purpose.

Concerts

The unique inter-disciplinary nature of this project and the highly interesting concept of 'emotion and music' allow us to present our findings to a large audience. To this end a concert-like approach will be used. In the past, this kind of science popularization has been very successful [Bel05b].

4 Commercial Relevance

Commercial relevance of this project can be found in many different areas and particular applications. The most prominent is probably the possibility to search the Internet based on similar audio fragments. One can think of a Google with an audio tab into which example song fragments can be put. This idea can further be extended into semantic peer to peer systems in which programs such as Napster, gnutella, Limewire and others cluster songs onto the machines of owners who will very probably like the music. Clustering songs based on their emotional connotations is also indispensable in database systems in order to speed up queries. Algorithms that recognize similar emotions are also a first step in data content extraction algorithms. Such algorithms might allow for automatic song meta-data generation. Another possibility is the classification of music libraries relying on the proposed methods. After song-clustering, the librarian can assign appropriate tags to every cluster. Radio stations and DJs will be able to generate playlists that shape the 'emotion' of a playlist. E.g., it might be possible to make a transition from sad to happy, from low-energy to high-energy and so on. Further commercial relevance can be found in plugins for existing software such as Cubase [htt05d], Cakewalk [htt05a], Protools [htt05c] and others.

Future prospects might include the possibility to quantitatively document compositions and songs in order to guide and teach students. Quantification of sound ambience might also be important for living standards (e.g., working environments, social places, living places). A last future prospect can be found in automatic comparison analysis to check for plagiarism. Organizations such as Tono [htt05e] might be interested in this.

5 Budget


The budget associated with this research is presented in table 2. We request resources for 1 full-time person at the psychology department and 1 full-time person at Norut IT for signal processing and BpmDj software development. A 10% overhead for communication and administration is included. Travel costs are included in the budget.

6 Ethics

All participants in the experiments will participate on a voluntary basis and after giving written informed consent. They will be informed that they can interrupt the procedure at any time, without having to give a reason and at no cost for withdrawing. In none of the experiments will sensitive personal information, names or other characteristics that might identify the participant be recorded. All participants will be thoroughly debriefed after the experiment.

7 Professional position and strategic importance of the project

The computational processes underlying music and the emotions are a little-investigated topic, and interdisciplinary collaborations on this topic are rare. Hence, one way in which the present proposal is of relevance is that it combines computational techniques with the empirical study of human responses, in concert with explicit compositional methods and musicological structure.

The project is set up as a cooperation between 3 partners: Norut IT, which has an extensive history of signal processing in cooperation with NASA and ESA; the Psychology department at the University of Tromsø, which has previous work in the area of harmonic analysis and its correlation to mood; and the music conservatory at the Hoyskolen in Tromsø, which has expertise in teaching emotional aspects of music. The music conservatory also has studios available to create musical prototypes, which enable us to investigate specific phenomena.

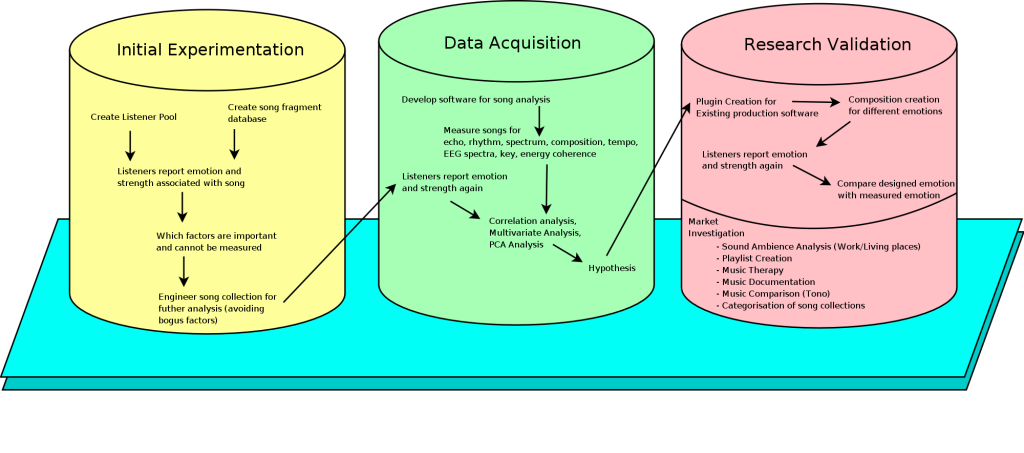

The particular set up of this project enables a knowledge transfer from basic research done at the university to end users working with music, as illustrated in figure 5.


Norut IT

(Norut Informasjonsteknologi as) is a Norwegian applied research institute in the field of information technology. The institute is a subsidiary of the Norut Group, which owns five main research institutes in Norway. The Norut Group has more than 250 employees. Its headquarters are located in Tromsø, Norway. Norut IT employs 25 research scientists plus 2 administrative personnel. The 2002 annual turnover was 19 MNOK.

Norut IT's scientific background is rooted firmly in signal processing and mathematical modelling. This has been applied in the areas of bioinformatics, earth observation (environment and resource monitoring based on satellite remote sensing) and advanced image analysis.

Dr. Werner Van Belle - originally from Belgium, now lives in Norway, where he works as a bioinformatics researcher at Norut IT. His research focuses on signal processing in the life sciences. In his spare time he is passionate about digital signal processing for audio applications. He is the inventor of an analysis technique for 2DE gel technology and co-inventor of a denoising technique for 2DE electrophoretic gel images. The Norwegian patent office recently reported back: 'the material is novel and has large scope'.

Psychology Department University Tromsø

Prof. Bruno Laeng - has a 100% research appointment in the biological psychology division of the Department of Psychology. Recent quality evaluations from Norges Forskningsråd show that the biological psychology division at Universitetet i Tromsø (UiTø) received one of the highest levels of evaluation within UiTø from the examining committee (i.e., very good). Moreover, this applicant was awarded the Pris til yngre forsker (young researcher award) from UiTø in 2000.

Music Conservatorium Hoyskolen Tromsø

The northern Norwegian Music Conservatory was established in 1971 and became a state funded high-school in 1979. Since 1994 it has been part of the 'department of arts' in the high-school in Tromsø. In 1985 the conservatory moved into a new building with its own concert hall, library (with reading and listening room) and sound studio, providing teaching and practicing space. The conservatory has a clear responsibility from the department to educate music artists and music teachers for the northern part of the country. In recent years, the student environment has been enriched through close contact with music education institutes in Northwest Russia and Eastern Europe, which led to the acceptance of students from Northwest Russia, Hungary and Bulgaria into the practicing education in Tromsø. Students from China also belong to the current environment. Furthermore, there is an established international cooperation through NORDPLUS and ERASMUS which gives students and teachers the possibility to study and develop their skills with other Norwegian and European conservatories.

Geir Davidsen - a euphonium player educated at the Music Conservatory in Tromsø and at the Norwegian Music High School (NMH). In 1998, Geir became the first in the country to complete a practicing discipline in euphonium at the NMH with the highest achievable grade. After finishing his studies he became a high-school lecturer at the music conservatory in Tromsø. Artistically, Geir's main project has been to learn new playing techniques for euphonium, and he has worked with a large variety of composers to write new compositions. In autumn 2005, Davidsen was accepted to conduct an artistic development work (comparable to an artist's 'PhD'), titled: New playing techniques for brass, interaction between performer and composer. In this project, Geir will contribute his widely recognized knowledge of playing techniques, and his empirical knowledge will undoubtedly be of great importance. His communication skills are also of prime value.

Prof. Ivar Frounberg researches composition and music technology at the Norwegian Music Highschool in Oslo. Advisor of Geir.

Prof. Niels Windfeld Lund is a professor in documentation-science at the University of Tromsø. Co-advisor of Geir.

Bibliography

- AJ96 - Yost William A. and Guzman Sandra J., Sound source processing: Is there echo in here?, Current Directions in Psychological Science 5 (1996), no. 4, 125-131.

- APR87 - Yost William A., Harder P.J., and Dye R.H., Complex spectral patterns with interaural differences: Dichotic pitch and the central spectrum, Auditory Processing of Complex Sounds (Yost William A. and C.S.Watson, eds.), Lawrence Erlbaum, 1987.

- AS85 - G.L. Ahern and G.E. Schwartz, Differential lateralization for positive and negative emotion in the human brain: EEG spectral analysis, Neuropsychologia (1985), no. 23, 745-756.

- BBQ+96 - M. Bosi, K. Brandenburg, Sch. Quackenbush, L. Fielder, K. Akagiri, H. Fuchs, M. Dietz, J. Herre, G. Davidson, and Yoshiaki Oikawa, ISO/IEC MPEG-2 advanced audio coding, Proc. of the 101st AES-Convention, preprint 4382 (1996).

- Bel04 - Werner Van Belle, Bpm measurement of digital audio by means of beat graphs & ray shooting, Tech. report, Department Computer Science, University i Tromsø, Malmvegen 206, 9022 Krokelvdalen, April 2004.

- Bel05a - --- Bpmdj: Free dj tools for linux, http://bpmdj.sourceforge.net/ (1999-2005).

- Bel05b - --- Advanced signal processing techniques in bpmdj, Side Program of the Northern Norwegian Insomnia Festival, Tvibit, http://bio6.itek.norut.no/werner/Papers/insomnia05/BpmDj[Insomnia-25-08-05].html, August 2005.

- Bel05c - --- Observations on spectrum and spectrum histograms in bpmdj, Online publications, Malmvegen 206, 9022 Krokelvdalen, Norway; Online at http://bio6.itek.norut.no/papers/; (2005).

- Ber74 - D.E. Berlyne, The new experimental aesthetics, New York: Halsted (1974), 27-90.

- BK92 - Bank and Knud, The application of digital signal processing to large-scale simulation of room acoustics: Frequency response modelling and optimization software for a multichannel dsp engine, Journal of the Audio Engineering Society 40 (1992), 260-276.

- Boz85 - P. Bozzi, Semantica dei bicordi, La psicologia della musica in Europa e in Italia. Bologna: CLUEB (G. Stefani and F. Ferrari, eds.), 1985.

- BZ01 - Anne J. Blood and Robert J. Zatorre, Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion, PNAS 98 (2001), no. 20, 11818-11823.

- CBB00 - M. Costa, P.E. Ricci Bitti, and F.L. Bonfiglioli, Psychological connotations of harmonic intervals, Psychology of Music 28 (2000), 4-22.

- CFB04 - M. Costa, P. Fine, and P.E. Ricci Bitti, Interval distributions, mode, and tonal strength of melodies as predictors of perceived emotion, Music Perception 22 (2004), no. 1.

- C.M95 - C.M.Bishop, Neural networks for pattern recognition, Oxford University Press, 1995.

- Coo59 - D. Cooke, The language of music, London: Oxford University Press, 1959.

- Cro84 - R.G. Crowder, Perception of the major/minor distinction: I. historical and theoretical foundations, Psychomusicology 4 (1984), 3-12.

- CS96 - P. Cheeseman and J. Stutz, Advances in knowledge discovery and data mining, ch. Bayesian Classification (AutoClass): Theory and Results, AAAI Press/MIT Press, 1996.

- D.95 - Botteldooren D., Finite-difference time-domain simulation of low-frequency room acoustic problems, The Journal of the Acoustical Society of America 98 (1995), 3302-3308.

- DeB01 - M. DeBellis, The routledge companion to aesthetics, London: Routledge, 2001.

- Dim05 - Ivan Dimkovic, Improved ISO AAC Coder, Tech. report, PsyTEL Research, Belgrade, Yugoslavia, 2005.

- EH99 - E.Zwicker and H.Fastl, Psychoacoustics - facts and models, 2nd edition ed., Springer-Verlag, 1999.

- FMN+98 - Tiffany Field, Alex Martinez, Thomas Nawrocki, Jeffrey Pickens, Nathan A. Fox, and Saul Schanberg, Music shifts frontal EEG in depressed adolescents - electroencephalography, Adolescence, Spring (1998).

- GEH98 - T. Gulzow, A. Engelsberg, and U. Heute, Comparison of a discrete wavelet transformation and a nonuniform polyphase filterbank applied to spectral-subtraction speech enhancement, Signal Processing 64 (1998), no. 1, 5-19.

- GM97 - M. Goto and Y. Muraoka, Music understanding at the beat level: Real-time beat tracking for audio signals, Readings in Computational Auditory Scene Analysis (1997).

- HBEG95 - J. Herre, K. Brandenburg, E. Eberlein, and B. Grill, Second generation iso/mpeg-audio layer iii coding, 98th AES Convention, Paris, preprint 3939 (1995).

- HD90 - J.B. Henriques and R.J. Davidson, Regional brain electrical asymmetries discriminate between previously depressed and healthy control subjects, Journal of Abnormal Psychology 29 (1990), 22-31.

- Hel72 - R.P. Hellman, Asymmetry of masking between noise and tone, Perception and Psychophysics 11 (1972), no. 3, 241-246.

- HJ96 - J. Herre and J.D. Johnston, Enhancing the performance of perceptual audio coders by using temporal noise shaping (tns), 101st AES Convention, Los Angeles, 1996.

- HJ05 - E.E. Hannon and S.P. Johnson, Infants use meter to categorize rhythms and melodies: Implications for musical structure learning, Cognitive Psychology 50 (2005), 354-377.

- htt - http://www.trolltech.com/products/qt/index.html, The qt product by trolltech.

- htt05a - http://www.cakewalk.com/, Cakewalk, Twelve Tone Systems (2005).

- htt05b - http://www.camo.com/, Unscrambler, CAMO (2005).

- htt05c - http://www.digidesign.com/, Pro tools, Digidesign (2005).

- htt05d - http://www.steinberg.de/, Cubase, Steinberg (2005).

- htt05e - http://www.tono.no, Tono, Galleriet, Tøyenbekken 21, Postboks 9171, Grønland, N-0134 Oslo, Norway - Tlf.: (+47) 22 05 72 00 - Fax: (+47) 22 05 72 50 (1928-2005).

- J. 93 - J. Kovacevic and M. Vetterli, Perfect reconstruction filter banks with rational sampling factors, IEEE Transactions on Signal Processing 41 (1993), no. 6.

- J.83 - Blauert J., Spatial hearing, Cambridge MA MIT Press, 1983.

- JL03 - P.N. Juslin and P. Laukka, Communication of emotions in vocal expression and music performance: Different channels, same code?, Psychological Bulletin 129 (2003), 770-814.

- Jus01 - P.N. Juslin, Music and emotion: Theory and research, ch. Communicating emotion in music performance: A review and theoretical framework, pp. 309-337, Oxford: Oxford University Press, 2001.

- K.87 - Clifton R. K., Breakdown of echo suppression in the precedence effect, Journal of the Acoustical Society of America 82 (1987), no. 5, 1834-1835.

- Kai96 - Gerald Kaiser, A friendly guide to wavelets, 6th edition ed., Birkhäuser, 1996.

- Ken88 - P. Kenealy, Validation of a music mood induction procedure: Some preliminary findings, Cognition and Emotion 2 (1988), 11-18.

- Kiv89 - P. Kivy, Sound sentiment, Tech. report, Philadelphia: Temple University Press, 1989.

- KSP96 - M. Kappelan, B. Strauß, and P.Vary, Flexible nonuniform filter banks using allpass transformation of multiple order, Proceedings 8th European Signal Processing Conference (EUSIPCO'96), 1996, pp. 1745-1748.

- LA62 - Kinsler L.E. and Frey A.R., Fundamentals of acoustics, 2nd ed., John Wiley & Sons; New York, 1962.

- LeD96 - Joseph LeDoux, The emotional brain: The mysterious underpinnings of emotional life, Touchstone: Simon & Schuster, 1996.

- LvdGP66 - W.J.M Levelt, J.P. van de Geer, and R. Plomp, Triadic comparison of musical intervals., British Journal of Mathematical and Statistical Psychology 19 (1966), 163-179.

- Mak00 - S. Makeig, Music, mind, and brain, ch. Affective versus analytic perception of musical intervals., pp. 227-250, New York; London: Plenum Press, 2000.

- MB82 - T.F. Maher and D.E. Berlyne, Verbal and exploratory responses to melodic musical intervals, Psychology of Music 10 (1982), no. 1, 11-27.

- Mor92 - G.C. Mornhinweg, Effects of music preference and selection on stress reduction., Journal of Holistic Nursing 10 (1992), 101-109.

- OL03 - H. Oelmann and B. Laeng, The emotional meaning of musical intervals: A validation of an ancient Indian music theory?, Poster presented at XIII Conference of the ESCOP, Granada (2003).

- OS89 - Alan V. Oppenheim and Ronald W. Schafer, Discrete-time signal processing, Prentice Hall, 1989.

- OT90 - A. Ortony and T.J. Turner, What's basic about basic emotions?, Psychological Review 97 (1990), 315-331.

- Plu80 - R. Plutchik, Emotion: Theory, research, and experience: Vol 1. theories of emotion, ch. A general psychoevolutionary theory of emotion, pp. 3-33, New York: Academic, 1980.

- PM86 - Peterson and Patrick M., Simulating the response of multiple microphones to a single acoustic source in a reverberant room, The Journal of the Acoustical Society of America 80 (1986), 1527-1529.

- SC95 - J. Stutz and P. Cheeseman, Maximum entropy and Bayesian methods, Cambridge 1994, ch. AutoClass - a Bayesian Approach to Classification, Kluwer Academic Publishers, Dordrecht, 1995.

- SD98 - Scheirer and Eric. D, Tempo and beat analysis of acoustic musical signals, Journal of the Acoustical Society of America 103 (1998), no. 1, 588.

- SW99 - L.D Smith and R.N. Williams, Children's artistic responses to musical intervals., American Journal of Psychology 112 (1999), no. 3, 383-410.

- UH03 - Christian Uhle and Juergen Herre, Estimation of tempo, micro time and time signature from percussive music, Proceedings of the 6th International Conference on Digital Audio Effects (DAFX-03), London, UK (2003).

- WB89 - Hartmann W.M. and Rakerd B, Localization of sound in rooms iv: The franssen effect, Journal of the Acoustical Society of America 86 (1989), no. 4, 1366-1373.

- Y.85 - Ando Y., Concert hall acoustics, Springer-Verlag, 1985.

- YKT+95 - Yamada, Kimura, Tomohiko, Funada, Takeaki, Inoshita, and Gen, Apparatus for detecting the number of beats, US Patent Nr 5,614,687 (1995).

Footnotes

- ... songs1

- With songs we refer to the popular notion of 'song', i.e., a produced and recorded composition with or without text, performed using one or many instruments. Typically around 3 to 4 minutes, but this is no strict requirement. If we refer to the 'emotion' contained within a song we refer to the most recognizable/accessible measures in the song (a fragment below 30 seconds).
