
Designing Music Therapy: Developing Algorithms to Extract Emotion from Music

Werner Van Belle - werner@yellowcouch.org, werner.van.belle@gmail.com

Abstract: The effect of music as a psychotherapeutic tool has been recognized for a long time. Alone, or in combination with classical treatment, music can alleviate depression, stress and anxiety, as well as acute and chronic pain. Such beneficial effects are likely to derive from its ability to induce mood changes. However, it remains unclear which aspects of music can cause emotional changes. This project aims to link advanced audio signal processing techniques to empirical psychoacoustic testing to develop algorithms that automatically retrieve emotions associated with a particular piece of music. Such algorithms could then be used to select and develop musical pieces for therapeutic purposes.

Keywords:

audio content extraction, psycho acoustics, BPM, tempo, rhythm, composition, echo, spectrum, sound color, music therapy, psychoacoustics

1 Background

Major depressive disorder and dysthymic disorder are two costly diseases for society. Epidemiological studies show that, in modern societies and in any given year, 5 to 10% of adults may suffer from a severe unipolar pattern of depression, while 2.5 to 5.4% may suffer from the less disabling dysthymic disorder [Com99]. It is estimated that clinical depression is experienced by 50% of all people who have suffered a stroke, 17% of those who have had a heart attack, 30% of cancer patients and between 9 and 27% of people with diabetes [Sim96].

Another serious health problem is neurodegenerative disease in the elderly population. Interventions that could delay disease onset even modestly can have a major public health impact [ASK+05]. Together, depression and dementia constitute two of the most significant mental health issues for nursing home residents [SSRB03].

Music is not, and could not be, a cure for the above disorders, but it does have the ability to influence the central nervous system and, specifically, it can be used as a mood induction procedure. Indeed, music is often used as an independent therapeutic nursing intervention, and it has been used in psychiatric settings, in the 'treatment' of chemical dependency and in hospital intensive care units. It can have a soothing and relaxing effect and can enhance well-being by reducing anxiety, improving sleep, and distracting patients from agitation, aggression and depressive states. These positive aspects of music have led to the use of 'music therapy' as an aid in the everyday care of patients in, for example, nursing homes [Cur86,SZ89]. Compared to other forms of patient care, the costs are low, there are no clear negative side effects, and music can help improve patient satisfaction. Furthermore, music can easily be applied to the care of many people, since its use is not constrained to a specific environment or circumstance: it can be used in the hospital as well as at home. Music has also been shown to improve symptoms of neurodegenerative diseases and may contribute to palliative care [Mys05,GTYG01]. Similarly, patients' reports of chronic pain can be reduced through appropriate music.

Music appears to elicit emotional responses in listeners. A survey [Tha95] of more than 300 participants ranked listening to music as the second-best method to get out of a bad mood (i.e., feelings of tension or low energy). This power of music to both stir and quiet emotions is also at the basis of its use as a therapeutic tool, which, in combination with actual medical treatment, might synergistically enhance the effect of the whole treatment [Cur86,SZ89].

Some researchers argue that music works by conditioned response, but attributing its effects only to a learning context may be incorrect, since music is a human universal: every known human society has made music from its very beginnings. Although cultures differ in how they make music and have developed different instruments and vocal techniques, they all seem to perceive music in a very similar way. That is, there may be considerable agreement about which emotions people associate with a specific musical performance [CFB04,DeB01,Jus01,CBB00,Mak00,SW99,Kiv89,Boz85,Cro84,MB82,Ber74,LvdGP66,Coo59]. In general, there seems to be very little research on which aspects of music induce a specific emotion and which underlying musical dimensions give music its structure and meaning.

2 Goal

This research tries to understand which aspects of music induce specific emotions and which underlying musical dimensions provide structure and meaning to the listener. We are specifically concerned with measuring the basic emotional content of music mathematically. Empirical participant testing will associate sound fragments with emotions, while various combinations of signal processing techniques will provide automated sound analysis. Multivariate statistical methods will measure the applicability of the various signal processing techniques.

The emotions contained in music are often assumed to be simple basic emotions. Once these can be identified, one might expect combinations of primary emotions to lead to more complex emotional patterns [LeD96,OT90,Plu80].

3 State of the art

3.1 Music as a way to alleviate clinical symptoms

Music therapy is believed to help alleviate many different symptoms, among them depression, anxiety, insomnia and chronic pain.

Depression - Depression and dementia remain two of the most significant mental health issues for nursing home residents [SSRB03]. There is now a growing interest in the therapeutic use of music in nursing homes. A widely shared conclusion is that music can supplement medical treatment and has a clear potential for improving care in nursing homes. Music also seems to alleviate major depression [JF99].

Pregnancy - Experiments on the effects of music on intrusive and withdrawn mothers showed a decrease in cortisol levels in the intrusive mothers. Concomitantly, their State Anxiety Inventory scores decreased, and their Profile of Mood States (POMS) depressed mood scores decreased significantly [TFHR+03].

Anxiety - In general, affective processes appear to be critical to understanding and promoting lasting therapeutic change. Results by [KWM01] indicate that music-assisted reframing is more efficacious than typical reframing interventions in reducing anxiety. One study of patients with animal phobias showed that when patients were exposed (from a distance) to the animals, those who simultaneously listened to music they preferred overcame their phobias more readily than the group treated in silence [ea88].

Insomnia - Music improves sleep quality in older adults. Using the Pittsburgh Sleep Quality Index and the Epworth Sleepiness Scale, [LG05] showed that music significantly improves sleep quality.

Pain reduction - Music therapy seems to be an efficient treatment for different forms of chronic pain, including fibromyalgia, myofascial pain syndromes and polyarthritis [MBH97], chronic headaches [RSV01] and chronic low back pain [GCP+05]. Music seems to affect especially the communicative and emotional dimensions of chronic pain [MBH97]. Sound-induced trance also allows patients to distract themselves from their condition, and it may result in pain relief 6-12 months later [RSV01].

The general impact of music on the nervous system extends to the immune system. Research by [HO03] indicates that listening to music after a stressful task increases norepinephrine levels. This is in agreement with [BBF+01], who verified the immunological impact of drum circles. Drum circles have been part of healing rituals in many cultures throughout the world since antiquity. Composite drumming directs the immune system away from classical stress and results in increased dehydroepiandrosterone-to-cortisol ratios, natural killer cell activity and lymphokine-activated killer cell activity, without alteration in plasma interleukin-2 or interferon-gamma. One area of application for these effects could be cancer treatment. Autologous stem cell transplantation, a common treatment for hematologic malignancies, causes significant psychological distress due to its effect on the immune system. A study by [CVM03] reveals that music therapy reduces mood disturbance in such patients. The fact that music can be used as a mood induction procedure with the required physiological effects may make it relevant for pharmaceutical companies [RA04]. Positive benefits of music therapy have also been observed in patients with multiple sclerosis, an autoimmune disease [SA04]. Finally, several researchers have observed a cumulative effect of music therapy [HL04,LG05].

3.2 Psycho-Acoustics of music

From an engineering point of view, psychoacoustics has been very important in the design of compression algorithms such as MPEG [BBQ+96,HBEG95]. A large body of work exists on psychoacoustic 'masking'. Removing information that humans do not hear improves the compression efficiency of the codec without audible content loss [HJ96,Hel72]. Frequency masking, temporal masking and volume masking are all important tools for present-day coders and decoders [Dim05]. The use of filter banks that respect the frequency sensitivity of the human ear is another crucial factor. Notwithstanding the large variety and high quality of existing work on this matter, there is little relation between understanding what can be thrown away (masking) and what, when kept, leads humans to associate specific feelings with music. In this proposal we aim to extract the emotions associated with music, without any need to compress the signal.

3.3 Current Psychoacoustic Dimensions

Musical emotions can be characterized in much the same way as basic human emotions. Happy and sad emotional tones are among the most commonly reported in music, and these basic emotions may be expressed, across musical styles and traditions, by similar structural features. Among these, pitch and rhythm seem basic: research on infants has shown that we are able to perceive these structural features early in life [HJ05]. Recently, it has been proposed that at least some portion of the emotional content of a musical piece is due to its close relationship with the vocal expression of emotions (either in speech, e.g., a sad tone, or in non-verbal expressions, e.g., crying). In other words, the accuracy with which specific emotions can be communicated depends on specific patterns of acoustic cues [JL03]. This account can explain why music is considered expressive of certain emotions, and it can also be related to evolutionary perspectives on the vocal expression of emotions. More specifically, some of the relevant acoustic cues shared by both domains (music and verbal communication) are speech rate/tempo, voice intensity/sound level, and high-frequency energy. An increase in speech rate/tempo may be most related to basic emotions such as anger and happiness, and a decrease to sadness and tenderness. Similarly, high-frequency energy increases with anger and happiness and decreases with sadness and tenderness. Different combinations and/or levels of these basic acoustic cues could result in several specific emotions. For example, fear may be associated in speech or song with low voice intensity and little high-frequency energy, whereas panic is expressed by increases in both intensity and high-frequency energy.
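To make two of these cues concrete, the sketch below quantifies sound level (as RMS level in dB relative to full scale) and high-frequency energy (as the fraction of spectral energy above a cutoff). It is a minimal illustration under our own assumptions: samples are floats in [-1, 1], the 1 kHz cutoff is an arbitrary illustrative choice, and a naive DFT stands in for an FFT. It is not the measure used in [JL03].

```python
import cmath
import math


def rms_level_db(samples):
    """Sound level as RMS amplitude in dB relative to a full-scale amplitude of 1.0."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20.0 * math.log10(rms) if rms > 0 else float("-inf")


def high_frequency_ratio(samples, sample_rate, cutoff_hz=1000.0):
    """Fraction of spectral energy above cutoff_hz.

    Uses a naive DFT over the positive-frequency bins (DC excluded); fine for
    short illustrative frames, far too slow for whole songs.
    """
    n = len(samples)
    total = high = 0.0
    for k in range(1, n // 2 + 1):
        coeff = sum(s * cmath.exp(-2j * math.pi * k * t / n) for t, s in enumerate(samples))
        energy = abs(coeff) ** 2
        total += energy
        if k * sample_rate / n > cutoff_hz:  # centre frequency of bin k
            high += energy
    return high / total if total > 0 else 0.0
```

With these definitions a 440 Hz sine yields a ratio near 0 and a 2 kHz sine a ratio near 1, while a full-scale sine sits about 3 dB below full scale.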

Juslin & Laukka [JL03] have proposed the following dimensions of music (each with a correlate in speech) as those that define the emotional structure and expression of music performance:

- pitch or F0 (i.e., the fundamental frequency, the lowest-frequency periodic component of a waveform);
- F0 contour or intonation;
- vibrato;
- intensity or loudness;
- attack, or the rapidity of tone onsets;
- tempo, or the velocity of the music;
- articulation, or the proportion of sound to silence;
- timing, or rhythm variation;
- timbre, i.e., high-frequency energy or an instrument's or singer's formant.
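One common way to estimate the first of these dimensions, F0, is to look for the lag at which the signal best matches a shifted copy of itself. The sketch below implements plain time-domain autocorrelation; the 50-1000 Hz search range is an assumption, and [JL03] does not prescribe this estimator.

```python
import math


def estimate_f0(samples, sample_rate, f_min=50.0, f_max=1000.0):
    """Estimate the fundamental frequency F0 (Hz) by autocorrelation.

    The lag with the largest (unnormalised) autocorrelation inside the pitch
    range [f_min, f_max] is taken as the period. Because the sum covers fewer
    terms at longer lags, the true period tends to beat its multiples, which
    limits octave errors.
    """
    n = len(samples)
    mean = sum(samples) / n
    x = [s - mean for s in samples]  # remove any DC offset
    min_lag = max(1, int(sample_rate / f_max))
    max_lag = min(n - 1, int(sample_rate / f_min))
    if min_lag > max_lag:
        raise ValueError("signal too short for the requested pitch range")
    best_lag, best_r = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        r = sum(x[t] * x[t + lag] for t in range(n - lag))
        if r > best_r:
            best_lag, best_r = lag, r
    return sample_rate / best_lag
```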

3.4 Additional Psychoacoustic Dimensions

Certainly, the above is not an exhaustive list of all the relevant dimensions of music or of the emotional content of music. Additional dimensions might include echo, harmonics, melody and low-frequency oscillations. We briefly describe these additional dimensions below.

Sound engineers recognize that echo, delay and spatial positioning [WB89,J.83,K.87,AJ96] influence the feeling of a sound production. Short echoes of high frequencies and long delays make the sound 'cold' (e.g., a concrete-walled room), while a short echo without delay, preserving the middle frequencies, makes the sound 'warm'. No echo at all makes the sound clinical and unnatural. Research into room acoustics [BK92,D.95,PM86,Y.85,LA62] shows that digital techniques have great difficulty simulating the correct 'feeling' of natural rooms, illustrating the importance of time correlation as a factor.

Harmonics refers to the interplay between a tone and the integer multiples of its frequency. A note's fundamental frequency together with all its harmonics forms the characteristic waveform of an instrument. Artists often select instruments based on the 'emotion' they capture (e.g., the clarinet typically plays 'funny' fragments while the cello plays 'sad' fragments).
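The dependence of waveform on harmonic content can be illustrated by additive synthesis: two tones with the same fundamental but different harmonic amplitudes share a period yet differ in waveform. The minimal sketch below synthesizes such tones and reads the harmonic amplitudes back by correlating the signal with sinusoids at multiples of the fundamental. The function names and the single-bin DFT approach are our own illustrative choices, not a model of any particular instrument.

```python
import math


def synthesize(f0, harmonic_amps, sample_rate, n):
    """Additive synthesis: harmonic h (1-based) has frequency h * f0 and
    amplitude harmonic_amps[h - 1]."""
    return [
        sum(a * math.sin(2 * math.pi * h * f0 * t / sample_rate)
            for h, a in enumerate(harmonic_amps, start=1))
        for t in range(n)
    ]


def harmonic_amplitudes(samples, f0, sample_rate, count):
    """Estimate the amplitudes of the first `count` harmonics of f0 by
    correlating the signal with a cosine and a sine at each multiple of f0
    (a single-bin DFT). Exact only when the frame holds whole periods of f0."""
    n = len(samples)
    amps = []
    for h in range(1, count + 1):
        w = 2 * math.pi * h * f0 / sample_rate
        re = sum(s * math.cos(w * t) for t, s in enumerate(samples))
        im = sum(s * math.sin(w * t) for t, s in enumerate(samples))
        amps.append(2.0 * math.hypot(re, im) / n)
    return amps
```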

Melody & pitch intervals - Key, scale and chords could also influence the feeling of a song. The terms 'major' and 'minor' chords already illustrate this, and classical Indian musicology suggests the same [OL03].

4 Planning

The proposed project will gather psychoacoustic information from human participants in an experimental laboratory setting where their emotional responses to various sounds will be measured. The project relies on three tasks: i) initial screening, ii) a designed experiment and iii) development of signal processing modules. In the first phase we screen different kinds of music and test their relevance to this study. This phase relies on open questionnaires and free-form answers. In the second phase we use an engineered collection of songs and acquire statistically relevant information by asking the participant group closed questions. In parallel with data acquisition, we will further develop signal processing modules that measure specific song properties.

Finally, we will measure the participants' physiological reactions by means of pupillometry. Because the pupil of the eye is also controlled by the autonomic nervous system [Loe93], monitoring changes in pupil diameter can provide a window onto the emotional state of an individual. Previous research has established that variations in pupil size occur in response to stimuli of interest to the individual [Dab97,HP60], emotional states [KB66,LF96,PS03], increased cognitive load [KB66,KB67,Pra70,HP63] and 'cognitive dissonance' [PL05]. Mudd, Conway and Schindler [MCS90] have already used pupillometry to index listeners' aesthetic appreciation of music.
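Pupil responses are commonly reported relative to a pre-stimulus baseline, because absolute diameter varies between participants and with ambient light. The sketch below shows a simple subtractive baseline correction; the 0.5 s baseline window, the use of None for samples lost to blinks, and the function name are assumptions for illustration, not part of the planned protocol.

```python
from statistics import mean


def pupil_dilation(diameters, sample_rate_hz, stimulus_onset_s, baseline_s=0.5):
    """Baseline-corrected pupil response to a stimulus.

    diameters: pupil diameter per sample; None marks a lost sample (e.g. a blink).
    Returns the mean diameter after onset minus the mean diameter in the
    `baseline_s` seconds before onset (subtractive baseline correction).
    """
    onset = int(round(stimulus_onset_s * sample_rate_hz))
    start = max(0, onset - int(round(baseline_s * sample_rate_hz)))
    baseline = [d for d in diameters[start:onset] if d is not None]
    response = [d for d in diameters[onset:] if d is not None]
    if not baseline or not response:
        raise ValueError("no valid samples in baseline or response window")
    return mean(response) - mean(baseline)
```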

4.1 Material & Methods

4.1.1 Psychophysics Experiments

The stimuli presented to the listeners (N=200) will be short (a couple of measures) and capture the essence of the song. All sounds will be presented to participants through stereo headphones. Participants will be asked to rate on a 7-point scale how well a particular emotion describes the music/sounds heard through the headphones. Responses and latencies will be recorded through participants' mouse clicks on a screen display implemented as an extension of BpmDj. All experiments will use within-participants designs (unless otherwise indicated). Analysis of variance will be used to test for interactions between factors in the experiments. Statistical tools such as principal component analysis or cluster analysis will be applied to the data set obtained from the participants, so as to reveal the underlying structure or features used by the participants in their attributions of emotional content to music.
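As an illustration of the principal component analysis mentioned above, the sketch below extracts the leading component of a ratings matrix with one column per emotion scale. It assumes a hypothetical layout (rows = ratings of one fragment by one participant, columns = 7-point emotion scales) and uses power iteration on the covariance matrix, which converges when the largest eigenvalue is strictly dominant; in practice a statistics package would be used instead.

```python
import math
import random


def first_principal_component(ratings, iterations=200, seed=0):
    """Leading principal component of a ratings matrix.

    ratings: one row per observation, one column per emotion scale.
    Returns (loadings, explained_variance_ratio); the loadings form a unit
    vector whose overall sign is arbitrary, as in any PCA.
    """
    n, p = len(ratings), len(ratings[0])
    means = [sum(row[j] for row in ratings) / n for j in range(p)]
    centred = [[row[j] - means[j] for j in range(p)] for row in ratings]
    cov = [[sum(r[i] * r[j] for r in centred) / (n - 1) for j in range(p)]
           for i in range(p)]
    rng = random.Random(seed)
    v = [rng.random() + 0.1 for _ in range(p)]  # random positive start vector
    for _ in range(iterations):  # power iteration towards the top eigenvector
        w = [sum(cov[i][j] * v[j] for j in range(p)) for i in range(p)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            raise ValueError("ratings have zero variance")
        v = [x / norm for x in w]
    eigenvalue = sum(v[i] * cov[i][j] * v[j] for i in range(p) for j in range(p))
    total_variance = sum(cov[i][i] for i in range(p))
    return v, eigenvalue / total_variance
```

If, say, 'happy' and 'energetic' ratings move together and 'sad' ratings move against them, the loadings come out with the same sign for the first two scales and the opposite sign for the third.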

4.1.2 Pupillometry

Pupillometry will be performed by means of the Remote Eye Tracking Device (R.E.D.), built by SMI-SensoMotoric Instruments in Teltow (Germany). Recordings will be analyzed with the iView software, also developed by SMI. The R.E.D. II can operate at a distance of 0.5-1.5 m; its eye-tracking sample rate is 50 Hz, with a resolution better than 0.1 degree. The eye-tracking device operates by determining the positions of two elements of the eye: the pupil and the corneal reflection. The sensor is an infrared-sensitive video camera, typically centered on the participant's left eye. The coordinates of all boundary points are fed to a computer that, in turn, determines the centroids of the two elements. The vector difference between the two centroids is the "raw" computed eye position. Pupil diameters are expressed as the number of video pixels spanned by the horizontal and vertical diameters of the ellipse that the pupil projects onto the video image, sampled every 20 ms.
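The geometry described above (centroids of the pupil and corneal-reflection boundaries, their vector difference, and the horizontal and vertical pupil extent) can be sketched as follows. The input format, a list of (x, y) boundary points in video pixels, is a hypothetical assumption; this is not the iView software's actual processing, and taking the mean of boundary points as the centroid assumes the points are spread evenly around each contour.

```python
from statistics import mean


def centroid(points):
    """Centroid (mean x, mean y) of boundary points given as (x, y) tuples."""
    return (mean(p[0] for p in points), mean(p[1] for p in points))


def raw_eye_position(pupil_boundary, reflection_boundary):
    """'Raw' eye position: vector from the corneal-reflection centroid to the pupil centroid."""
    px, py = centroid(pupil_boundary)
    cx, cy = centroid(reflection_boundary)
    return (px - cx, py - cy)


def pupil_diameters(pupil_boundary):
    """Horizontal and vertical extent (in video pixels) of the pupil ellipse."""
    xs = [p[0] for p in pupil_boundary]
    ys = [p[1] for p in pupil_boundary]
    return (max(xs) - min(xs), max(ys) - min(ys))
```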


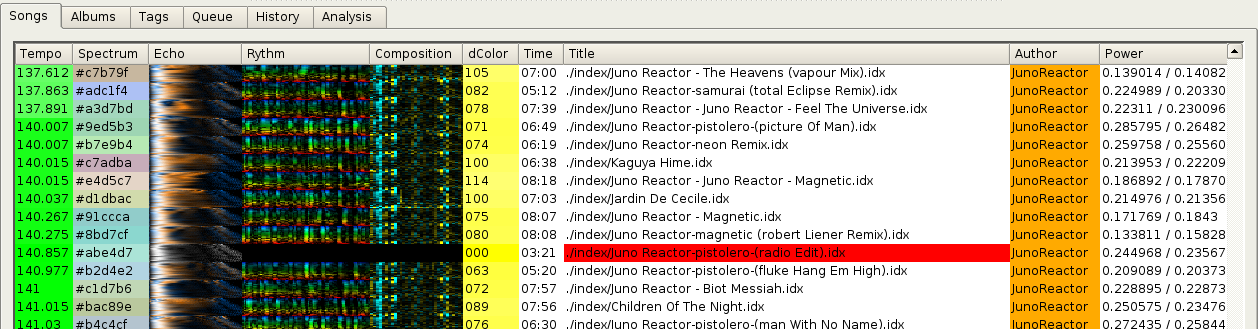

4.1.3 Software: BpmDj Music Analysis

The open-source software BpmDj [Bel05a] (Figure 2) analyzes and stores a large number of soundtracks. Werner Van Belle has developed the program as a hobby project since 2000. It contains advanced algorithms to measure spectrum, tempo, rhythm, composition and echo characteristics.

Tempo module - Five different tempo measurement techniques are available, of which autocorrelation [OS89] and ray shooting [Bel04] are the most appropriate. Other techniques are described in [YKT+95,UH03,SD98,GM97]. All analyzers in BpmDj make use of the Bark psychoacoustic scale [GEH98,KSP96,EH99]. The spectrum or sound color is visualized as a three-channel (red/green/blue) color based on a Karhunen-Loève transform [C.M95] of the available songs.

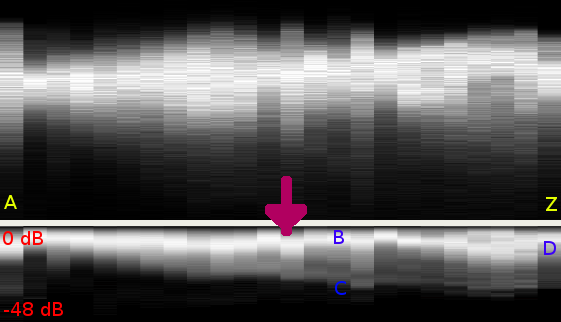
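The autocorrelation approach to tempo can be sketched on an onset-strength envelope: a beat period of P frames shows up as a strong autocorrelation at lag P. This is a generic textbook version, not BpmDj's implementation; the envelope input, its frame rate and the 60-180 BPM search range are assumptions.

```python
def estimate_bpm(envelope, frames_per_second, bpm_min=60.0, bpm_max=180.0):
    """Tempo from the autocorrelation of an onset-strength envelope.

    The lag (in frames) with the strongest autocorrelation inside the
    allowed tempo range is taken as the beat period.
    """
    n = len(envelope)
    mean = sum(envelope) / n
    x = [e - mean for e in envelope]  # remove the envelope's average level
    min_lag = max(1, int(frames_per_second * 60.0 / bpm_max))
    max_lag = min(n - 1, int(frames_per_second * 60.0 / bpm_min))
    best_lag, best_r = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        r = sum(x[t] * x[t + lag] for t in range(n - lag))
        if r > best_r:
            best_lag, best_r = lag, r
    return 60.0 * frames_per_second / best_lag
```

For an envelope sampled at 100 frames per second with an onset every 50 frames, the strongest lag is 50 frames, i.e. 120 BPM.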

Echo/delay modules - Echo characteristics are measured through a distribution analysis of the frequency content of the music, which is then enhanced using a differential autocorrelation [Bel05d]. Fig. 3 shows the echo characteristic of a song.

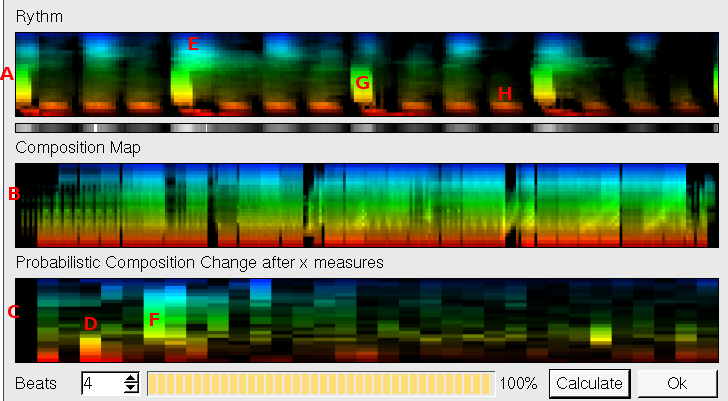
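The role of autocorrelation in echo measurement can be illustrated with the simplest case: a signal plus a single delayed, attenuated copy of itself produces an autocorrelation peak at the delay. The sketch below uses plain normalized autocorrelation on the time signal; it is not the distribution-based differential autocorrelation of [Bel05d], whose details are not given here.

```python
def echo_delay(signal, min_lag=1):
    """Return (lag, strength) of the strongest autocorrelation peak at or
    beyond min_lag, normalised by the zero-lag energy.

    For y[t] = x[t] + g * x[t - d] with white-noise x, the peak lies at lag d
    with a height of roughly g / (1 + g**2), somewhat less on short signals
    because fewer samples overlap at longer lags.
    """
    n = len(signal)
    energy = sum(s * s for s in signal)
    best_lag, best_r = None, float("-inf")
    for lag in range(min_lag, n // 2):
        r = sum(signal[t] * signal[t + lag] for t in range(n - lag)) / energy
        if r > best_r:
            best_lag, best_r = lag, r
    return best_lag, best_r
```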

Rhythm/composition modules - Rhythm and composition properties rely on correct tempo information. Rhythm relies on cross-correlation and overlaying of all available measures. Composition relies on measuring the probability of a composition change after a given number of measures. Fig. 3 presents a rhythm and composition pattern.

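The 'overlaying' step of rhythm extraction can be sketched as folding an energy envelope into measures and averaging the frames that fall on the same position within the measure. This sketch assumes the measure length (in frames) is already known from the tempo module and that the envelope starts on a downbeat; the cross-correlation alignment mentioned above is not shown, and the 16-slot resolution is an arbitrary choice.

```python
def rhythm_pattern(envelope, frames_per_measure, bins=16):
    """Overlay all complete measures of an energy envelope.

    Every frame is assigned to one of `bins` slots according to its position
    inside its measure; the result is the average energy per slot.
    frames_per_measure may be fractional.
    """
    measures = int(len(envelope) // frames_per_measure)
    usable = int(measures * frames_per_measure)  # ignore a trailing partial measure
    sums = [0.0] * bins
    counts = [0] * bins
    for t in range(usable):
        phase = (t % frames_per_measure) / frames_per_measure  # 0 <= phase < 1
        b = min(bins - 1, int(phase * bins))
        sums[b] += envelope[t]
        counts[b] += 1
    return [s / c if c else 0.0 for s, c in zip(sums, counts)]
```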

From an end-user point of view, the program supports distributed analysis, automatic mixing of music, distance metrics for all analyzers, as well as clustering and automatic classification based on this information. Everything is tied together in a Qt-based [htt] user interface. BpmDj will be used as the base platform into which new modules will be plugged.

4.2 Initial Screening

Not only can music elicit emotional responses; many observations also indicate that one's mental state influences music preference, which thus symptomatically reveals mental aspects of the listener [LW96,BGL02,PBP04,San04]. This observation will be used in the initial screening phase, during which we will ask participants to rate the music they are presented with as well as to list the music they prefer. Combined with an analysis of the participants' mental state, this will provide input into an experimental design (section 4.3).

In the initial screening phase we rely on an open questionnaire and a random selection of songs. We want to learn which songs are useful for further statistical analysis and to explore the parameters that should be taken into account. For every song we are interested in 1) the reported emotion, 2) the strength of the reported emotion and 3) why the participant perceives a specific emotion.

The following factors are currently believed to be important. a) Level of schooling - artists working with music might have a better feeling for music than unschooled people. b) Semantic information - what is the impact of semantic information, such as recognizing the artist or song? c) Emotional reporting - how well are human participants able to report emotions? What is the impact of their initial mood on the reports [FMN+98]? Does emotion strength limit the information we might obtain? What is the relevance of response time? Is a short response time better suited to obtaining consistent information, or is a long response time, allowing time to verbalize, more informative? d) Memory - what is the impact of presentation sequence? Does information from one song carry over to the next? Is there a lag effect in reported emotions? Is there a correlation between the accuracy of answers and the time the participant has been listening to songs?

We will rely on normal participants (equally distributed male/female) drawn from the student population. Before and after the test, participants will be asked to list a number of their favorite songs and to assess their mental state using a combination of different questionnaires, including, but not necessarily limited to, the Pittsburgh Sleep Quality Index, the Epworth Sleepiness Scale, the Beck Depression Inventory II, the Beck Anxiety Inventory, the Profile of Mood States [MLD71], the State Anxiety Inventory [KBR98] and Plutchik's Emotions Profile Index [Plu74].

The result of the initial screening will consist of a) a list of songs related to specific emotions and b) a list of suspected parameters for emotional content retrieval.


4.3 Designed Experiment

After initial screening we will design a set of experiments to gather reliable information. Design of Experiments allows one to vary all relevant factors systematically. When the results of the experiments are analyzed, they identify the factors that most influence the results, as well as details such as the existence of interactions and synergies between factors [Box54,Gir92].
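A two-level full factorial design is one standard way to vary factors systematically and separate main effects from interactions. The sketch below generates such a design and estimates effects from the coded levels; the factor meanings (for example tempo and echo, after the tentative design variables listed in this section) and the response values are hypothetical, not project data.

```python
from itertools import product


def full_factorial(k):
    """All 2**k runs of a two-level full factorial design (levels coded -1/+1)."""
    return [list(run) for run in product((-1, 1), repeat=k)]


def effect(design, responses, factors):
    """Estimated effect of one factor (main effect) or of a product of factors
    (interaction): mean response where the product of the coded levels is +1
    minus mean response where it is -1."""
    plus, minus = [], []
    for run, y in zip(design, responses):
        sign = 1
        for f in factors:
            sign *= run[f]
        (plus if sign > 0 else minus).append(y)
    return sum(plus) / len(plus) - sum(minus) / len(minus)
```

With four hypothetical runs in the order produced by full_factorial(2), responses 2.75, 3.25, 4.25 and 5.75 give an effect of 2.0 for factor 0, 1.0 for factor 1 and 0.5 for their interaction.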

The design variables encompass a set of measured song properties (see section 4.4). Tentatively, we believe that echo, tempo, harmonics and key measures will be among the design variables. The response variables encompass the emotional response, quantified using pupillometry. If appropriate, we might consider decomposing emotional responses into primary emotions [LeD96,OT90,Plu80]. The experiment itself requires the construction of a closed questionnaire (targeting the response variables) and a selection of songs (providing the design variables).

After the experiment has been performed, multivariate analysis will reveal the relation between the design variables and the response variables. The experiment must be designed very carefully, taking into account all factors determined in the initial task. In particular, testing sequence and participant endurance should be accounted for.

4.4 Signal Processing Modules

Measuring subjective responses to music provides only part of the information necessary to understand the relation between audio content and emotional perception.

Signal processing techniques, developed in this task, will enable us to link subjective perception to quantitatively measured properties. BpmDj currently supports measurement of tempo, echo, rhythm and compositional information. We will extend BpmDj with new modules able to capture information relevant to the emotional aspects of music. Below we describe the modules we will develop.

Key/scale module - This module will measure the occurrence of chords by measuring individual notes. To provide information on the scale (equal-tempered, pure major, pure minor, Arabic, Pythagorean, Werckmeister, Kirnberger, slendro, pelog and others), it will also measure the tuning/detuning of the different notes.

Dynamic module - This module will measure energy changes at different frequencies. First-order energy changes provide the attack and decay parameters of the song. Second-order energy changes might provide information on song temperament.

Harmonic module - This module will interrelate different frequencies in a song by investigating the probability that specific frequencies occur together. A Bayesian classification of the time-based frequency and phase content will determine different classes. Every class will describe which attributes (frequencies and phases) belong together, thereby providing a characteristic sound or waveform of the music. This classification will allow us to correlate harmonic relations with the perception of music. AutoClass [SC95,CS96] will perform the Bayesian classification.

Melody module - This module will rely on a similar technique, measuring relations between notes in time.
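Measuring tuning/detuning amounts to comparing each detected note frequency with a reference grid. The sketch below uses 12-tone equal temperament with A4 = 440 Hz as that grid (both assumptions) and reports the deviation in cents; a consistent pattern of deviations across notes could then hint at a non-equal-tempered scale. Note detection itself is not shown, and the function name is hypothetical.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def nearest_note(freq_hz, a4_hz=440.0):
    """Map a frequency to the nearest equal-tempered note and report its
    detuning in cents (100 cents = one equal-tempered semitone)."""
    semitones = 12.0 * math.log2(freq_hz / a4_hz)  # signed distance from A4
    nearest = round(semitones)
    cents = 100.0 * (semitones - nearest)
    midi = 69 + nearest  # MIDI number of the nearest note (A4 = 69)
    name = NOTE_NAMES[midi % 12] + str(midi // 12 - 1)
    return name, cents
```

A just major third above C4 (a 5/4 frequency ratio), for example, maps to E4 about 13.7 cents flat.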

All of the above modules need to decompose a song into its frequency content. To this end, we will initially use a sliding-window Fourier transform [OS89]. Later in the project, integration of a multi-rate filterbank will achieve a more accurate decomposition. Existing filterbanks include octave filter banks [J. 93] and the psycho-acoustic MP3 filterbank [BBQ+96], which would even allow us to work directly with MP3 frames. We will also investigate wavelet-based filterbanks [Kai96], because they let us experiment with different wavelet bases that accurately capture the required harmonics. The preliminary analysis might indicate the need for different and/or additional signal processing modules. We believe that the two basic technologies supporting this work package (Bayesian classification and multi-rate signal decomposition) should make it easy to realize new modules quickly.

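A sliding-window Fourier transform can be sketched as Hann-windowed frames taken every `hop` samples, each turned into a magnitude spectrum. The window length, hop size and Hann window are our assumptions, and the naive DFT is for readability only; a real module would use an FFT.

```python
import cmath
import math


def stft(samples, window_size=256, hop=128):
    """Sliding-window Fourier transform: magnitude spectra of Hann-windowed
    frames taken every `hop` samples (naive DFT, for illustration only)."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * i / window_size) for i in range(window_size)]
    frames = []
    for start in range(0, len(samples) - window_size + 1, hop):
        frame = [samples[start + i] * window[i] for i in range(window_size)]
        spectrum = []
        for k in range(window_size // 2 + 1):  # non-negative frequencies only
            coeff = sum(frame[i] * cmath.exp(-2j * math.pi * k * i / window_size)
                        for i in range(window_size))
            spectrum.append(abs(coeff))
        frames.append(spectrum)
    return frames
```

Bin k of each frame corresponds to k * sample_rate / window_size Hz, so a 1 kHz tone sampled at 8 kHz peaks in bin 8 when window_size is 64.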

5 Strategic Importance

The strategic relevance of this proposal to the HELSEOMSORG program lies in its ability to improve the design of music therapy. If a targeted use of music can alleviate pain, depression, instability, anxiety and other symptoms, this will increase the quality of life of many people in nursing homes and psychiatric institutions, and of the elderly confined to their homes. Understanding which musical aspects lead to an emotional response might lead to the creation of efficient playlists and a more scientific way of assessing and selecting songs. Depending on the results of the presented work, we might be able to recommend to different patients what kind of music might suit them. Creating typical 'likes-a-lot' and 'should-listen-to' playlists per emotional state might enhance the psychotherapist's toolbox. The presented research might also inform the ambience required in hospital maternity and palliative care areas.

6 Dissemination of Results

Publication - The research will primarily be of interest to the international research communities in cognitive science, computer science and nursing. Articles based on the proposed basic research will be submitted to leading journals in cognitive science, such as Music Perception. In addition, findings from this research will be presented at international conferences.

Artefacts - All artefacts produced in this project will be open-sourced in order to attract international attention from computer scientists working in the field of content extraction. BpmDj and Cryosleep are both online, with more than 390 and 688 unique monthly downloads respectively (excluding search robots), so we are able to reach many researchers at low cost.

7 Ethics

All participants in the experiments will participate on a voluntary basis and after written informed consent. They will be informed that they can interrupt the procedure at any time, without having to give a reason and at no cost for withdrawing. In none of the experiments will sensitive personal information, names or other characteristics that might identify the participant be recorded. All participants will be thoroughly debriefed after the experiment.

8 Professional position

The research program itself is multidisciplinary. It includes psychology, statistics, computer science and signal processing. The computational processes underlying music and emotions are a little-investigated topic, and interdisciplinary collaborations on this topic are rare.

Prof. Bruno Laeng - has a 100% academic position (50% research appointment) in the biological psychology (biologisk psykologi) division of the Department of Psychology. Recent quality evaluations by Norges forskningsråd (the Research Council of Norway) show that the biological psychology division at Universitetet i Tromsø (UiTø) received one of the highest evaluations within UiTø from the examining committee (i.e., very good). Moreover, this applicant was awarded the Pris til yngre forsker (prize for younger researchers) by UiTø in 2000.

Dr. Werner Van Belle - currently works at Norut IT. In his spare time, he is passionate about digital signal processing for audio applications. Of particular relevance to this proposal is his work on mood induction [Bel05b] and sound analysis [Bel05a,Bel05c,Bel05d,Bel04].

The research is supported by 'het Weyerke', a Belgian service center/nursing home for mentally handicapped and elderly people. They are mainly interested in music as a stimulating and soothing mechanism to alleviate stress and depressive symptoms in elderly people with dementia. Their long-standing tradition in this matter will provide input into our study. Furthermore, the presented international cooperation might allow the exchange of key scientists and know-how.

Bibliography

- AJ96 - W.A. Yost and S.J. Guzman, Sound source processing: Is there an echo in here?, Current Directions in Psychological Science 5 (1996), no. 4, 125-131.

- ASK+05 - D. Aldridge, W. Schmid, M. Kaeder, C. Schmidt, and T. Ostermann, Functionality or aesthetics? A pilot study of music therapy in the treatment of multiple sclerosis patients, Complement Ther Med 3 (2005), no. 1, 25-33.

- BBF+01 - B.B. Bittman, L.S. Berk, D.L. Felten, J. Westengard, O.C. Simonton, J. Pappas, and M. Ninehouser, Composite effects of group drumming music therapy on modulation of neuroendocrine-immune parameters in normal subjects, Altern Ther Health Med 7 (2001), no. 1, 38-47.

- BBQ+96 - M. Bosi, K. Brandenburg, S. Quackenbush, L. Fielder, K. Akagiri, H. Fuchs, M. Dietz, J. Herre, G. Davidson, and Y. Oikawa, ISO/IEC MPEG-2 advanced audio coding, Proc. of the 101st AES Convention, preprint 4382 (1996).

- Bel04 - Werner Van Belle, BPM measurement of digital audio by means of beat graphs & ray shooting, Tech. report, Department of Computer Science, University of Tromsø, Malmvegen 206, 9022 Krokelvdalen, April 2004.

- Bel05a - ---, BpmDj: Free DJ tools for Linux, http://bpmdj.sourceforge.net/ (1999-2005).

- Bel05b - ---, The Cryosleep brainwave generator, http://bio6.itek.norut.no/cryosleep/ or http://werner.onlinux.be/cryosleep/ (2003-2005).

- Bel05c - ---, Advanced signal processing techniques in BpmDj, Side Program of the Northern Norwegian Insomnia Festival, Tvibit, http://bio6.itek.norut.no/werner/Papers/insomnia05/BpmDj[Insomnia-25-08-05].html, August 2005.

- Bel05d - ---, Observations on spectrum and spectrum histograms in BpmDj, Online publications, Malmvegen 206, 9022 Krokelvdalen, Norway; online at http://bio6.itek.norut.no/papers/ (2005).

- Ber74 - D.E. Berlyne, The new experimental aesthetics, New York: Halsted (1974), 27-90.

- BGL02 - M. Burge, C. Goldblat, and D. Lester, Music preferences and suicidality: a comment on Stack, Death Stud. 26 (2002), no. 6, 501-504.

- BK92 - K. Bank, The application of digital signal processing to large-scale simulation of room acoustics: Frequency response modelling and optimization software for a multichannel DSP engine, Journal of the Audio Engineering Society 40 (1992), 260-276.

- Box54 - G.E.P. Box, Some theorems on quadratic forms applied in the study of analysis of variance problems, Annals of Mathematical Statistics 25 (1954), 290-302.

- Boz85 - P. Bozzi, Semantica dei bicordi, in G. Stefani and F. Ferrari (eds.), La psicologia della musica in Europa e in Italia, Bologna: CLUEB, 1985.

- CBB00 - M. Costa, P.E. Ricci Bitti, and F.L. Bonfiglioli, Psychological connotations of harmonic intervals, Psychology of Music 28 (2000), 4-22.

- CFB04 - M. Costa, P. Fine, and P.E. Ricci Bitti, Interval distributions, mode, and tonal strength of melodies as predictors of perceived emotion, Music Perception 22 (2004), no. 1.

- C.M95 - C.M. Bishop, Neural networks for pattern recognition, Oxford University Press, 1995.

- Com99 - Ronald J. Comer, Fundamentals of abnormal psychology, 2nd ed., Worth-Freeman, 1999.

- Coo59 - D. Cooke, The language of music, London: Oxford University Press, 1959.

- Cro84 - R.G. Crowder, Perception of the major/minor distinction: I. historical and theoretical foundations, Psychomusicology 4 (1984), 3-12.

- CS96 - P. Cheeseman and J. Stutz, Advances in knowledge discovery and data mining, ch. Bayesian Classification (AutoClass): Theory and Results, AAAI Press/MIT Press, 1996.

- Cur86 - S. L. Curtis, The effect of music on pain relief and relaxation of the terminally ill, Journal of Music Therapy 23 (1986), no. 1, 10-24.

- CVM03 - B.R. Cassileth, A.J. Vickers, and L.A. Magill, Music therapy for mood disturbance during hospitalization for autologous stem cell transplantation: a randomized controlled trial., Cancer 98 (2003), no. 12, 2723-2729.

- D.95 - D. Botteldooren, Finite-difference time-domain simulation of low-frequency room acoustic problems, The Journal of the Acoustical Society of America 98 (1995), 3302-3308.

- Dab97 - J.M. Dabbs, Testosterone and pupillary response to auditory sexual stimuli, Physiology and Behavior 62 (1997), 909-912.

- DeB01 - M. DeBellis, The Routledge companion to aesthetics, London: Routledge, 2001.

- Dim05 - Ivan Dimkovic, Improved ISO AAC Coder, Tech. report, PsyTEL Research, Belgrade, Yugoslavia, 2005.

- ea88 - G. H. Eifert et al., Affect modification through evaluative conditioning with music, Behavior Research and Therapy 26 (1988), no. 4, 321-330.

- EH99 - E. Zwicker and H. Fastl, Psychoacoustics: Facts and models, 2nd ed., Springer-Verlag, 1999.

- FMN+98 - Tiffany Field, Alex Martinez, Thomas Nawrocki, Jeffrey Pickens, Nathan A. Fox, and Saul Schanberg, Music shifts frontal EEG in depressed adolescents, Adolescence, Spring (1998).

- GCP+05 - S. Guetin, E. Coudeyre, M.C. Picot, P. Ginies, B. Graber-Duvernay, D. Ratsimba, W. Vanbiervliet, J.P. Blayac, and C. Herisson, Effect of music therapy among hospitalized patients with chronic low back pain: a controlled, randomized trial, Ann Readapt Med Phys. 48 (2005), no. 5, 217-224.

- GEH98 - T. Gulzow, A. Engelsberg, and U. Heute, Comparison of a discrete wavelet transformation and a nonuniform polyphase filterbank applied to spectral-subtraction speech enhancement, Signal Processing 64 (1998), no. 1, 5-19.

- Gir92 - Ellen R. Girden, ANOVA: Repeated measures, Thousand Oaks, CA: Sage Publications, 1992.

- GM97 - M. Goto and Y. Muraoka, Music understanding at the beat level: Real-time beat tracking for audio signals, Readings in Computational Auditory Scene Analysis (1997).

- GTYG01 - D. Gagner-Tjellesen, E.E. Yurkovich, and M. Gragert, Use of music therapy and other ITNIs in acute care, J Psychosoc Nurs Ment Health Serv 39 (2001), no. 10, 26-37.

- HBEG95 - J. Herre, K. Brandenburg, E. Eberlein, and B. Grill, Second generation ISO/MPEG-Audio Layer III coding, 98th AES Convention, Paris, preprint 3939 (1995).

- Hel72 - R.P. Hellman, Asymmetry of masking between noise and tone, Perception and Psycho-acoustics 11 (1972), no. 3, 241-246.

- HJ96 - J. Herre and J.D. Johnston, Enhancing the performance of perceptual audio coders by using temporal noise shaping (TNS), 101st AES Convention, Los Angeles, 1996.

- HJ05 - E.E. Hannon and S.P. Johnson, Infants use meter to categorize rhythms and melodies: Implications for musical structure learning, Cognitive Psychology 50 (2005), 354-377.

- HL04 - W.C. Hsu and H.L. Lai, Effects of music on major depression in psychiatric inpatients, Arch Psychiatr Nurs. 18 (2004), no. 5, 193-199.

- HO03 - E. Hirokawa and H. Ohira, The effects of music listening after a stressful task on immune functions, neuroendocrine responses, and emotional states in college students, J Music Ther 40 (2003), no. 3, 189-211.

- HP60 - E.H. Hess and J.M. Polt, Pupil size as related to interest value of visual stimuli, Science 132 (1960), 349-350.

- HP63 - --- Pupil size in relation to mental activity during simple problem-solving, Science 140 (1963), 1190-1192.

- htt - http://www.trolltech.com/products/qt/index.html, The Qt product by Trolltech.

- J. 93 - J. Kovacevic and M. Vetterli, Perfect reconstruction filter banks with rational sampling factors, IEEE Transactions on Signal Processing 41 (1993), no. 6.

- J.83 - J. Blauert, Spatial hearing, Cambridge, MA: MIT Press, 1983.

- JF99 - N.A. Jones and T. Field, Massage and music therapies attenuate frontal EEG asymmetry in depressed adolescents, Adolescence 34 (1999), no. 135, 529-534.

- JL03 - P.N. Juslin and P. Laukka, Communication of emotions in vocal expression and music performance: Different channels, same code?, Psychological Bulletin 129 (2003), 770-814.

- Jus01 - P.N. Juslin, Music and emotion: Theory and research, ch. Communicating emotion in music performance: A review and theoretical framework, pp. 309-337, Oxford: Oxford University Press, 2001.

- K.87 - R.K. Clifton, Breakdown of echo suppression in the precedence effect, Journal of the Acoustical Society of America 82 (1987), no. 5, 1834-1835.

- Kai96 - Gerald Kaiser, A friendly guide to wavelets, 6th ed., Birkhäuser, 1996.

- KB66 - D. Kahneman and J. Beatty, Pupil diameter and load on memory, Science 154 (1966), 1583-1585.

- KB67 - D. Kahneman and J. Beatty, Pupillary responses in a pitch-discrimination task, Perception and Psychophysics 2 (1967), 101-104.

- KBR98 - G. Kvale, E. Berg, and M. Raadal, The ability of Corah's dental anxiety scale and Spielberger's state anxiety inventory to distinguish between fearful and regular Norwegian dental patients, Acta Odontol Scand, Department of Clinical Psychology, University of Bergen, Norway 56 (1998), no. 2, 105-109.

- Kiv89 - P. Kivy, Sound sentiment, Philadelphia: Temple University Press, 1989.

- KSP96 - M. Kappelan, B. Strauß, and P. Vary, Flexible nonuniform filter banks using allpass transformation of multiple order, Proceedings 8th European Signal Processing Conference (EUSIPCO'96), 1996, pp. 1745-1748.

- KWM01 - T. Kerr, J. Walsh, and A. Marshall, Emotional change processes in music-assisted reframing, J Music Ther 38 (2001), no. 3, 193-211.

- LA62 - L.E. Kinsler and A.R. Frey, Fundamentals of acoustics, 2nd ed., New York: John Wiley & Sons, 1962.

- LeD96 - Joseph LeDoux, The emotional brain: The mysterious underpinnings of emotional life, Touchstone: Simon & Schuster, 1996.

- LF96 - R.E. Lubow and O. Fein, Pupillary size in response to a visual guilty knowledge test: New technique for the detection of deception, Journal of Experimental Psychology: Applied 2 (1996), no. 2, 164-177.

- LG05 - H.L. Lai and M. Good, Music improves sleep quality in older adults, J Adv Nurs. 49 (2005), no. 3, 234-244.

- Loe93 - I.E. Loewenfeld, The pupil: Anatomy, physiology and clinical applications, Detroit: Wayne State University Press, 1993.

- LvdGP66 - W.J.M. Levelt, J.P. van de Geer, and R. Plomp, Triadic comparison of musical intervals, British Journal of Mathematical and Statistical Psychology 19 (1966), 163-179.

- LW96 - D. Lester and M. Whipple, Music preference, depression, suicidal preoccupation and personality: comment on Stack and Gundlach's papers, Suicide Life Threat Behav. 26 (1996), no. 1, 68-70.

- Mak00 - S. Makeig, Music, mind, and brain, ch. Affective versus analytic perception of musical intervals, pp. 227-250, New York; London: Plenum Press, 2000.

- MB82 - T.F. Maher and D.E. Berlyne, Verbal and exploratory responses to melodic musical intervals, Psychology of Music 10 (1982), no. 1, 11-27.

- MBH97 - H.C. Muller-Busch and P. Hoffmann, Active music therapy for chronic pain: a prospective study, Schmerz. 11 (1997), no. 2, 91-100.

- MCS90 - S. Mudd, C.G. Conway, and D.E. Schindler, The eye as music critic: Pupil response and verbal preferences, Studia psychologica 32 (1990), no. 1-2, 23-30.

- MLD71 - D.M. McNair, M. Lorr, and L.F. Droppleman, Manual for the profile of mood states, Tech. report, San Diego, CA: Educational and Industrial Testing Services, 1971.

- Mys05 - A. Myskja, Therapeutic use of music in nursing homes, Tidsskr Nor Laegeforen 125 (2005), no. 11, 1497-1499.

- OL03 - H. Oelmann and B. Laeng, The emotional meaning of musical intervals: A validation of an ancient Indian music theory?, Poster presented at XIII Conference of the ESCOP, Granada (2003).

- OS89 - Alan V. Oppenheim and Ronald W. Schafer, Discrete-time signal processing, Prentice Hall, 1989.

- OT90 - A. Ortony and T.J. Turner, What's basic about basic emotions?, Psychological Review 97 (1990), 315-331.

- PBP04 - J. Posluszna, A. Burtowy, and R. Palusinski, Music preferences and tobacco smoking, Psychol Rep 94 (2004), no. 1, 240-242.

- PL05 - H.G. Paulsen and Bruno Laeng, Pupillometry of grapheme-color synaesthesia, Cortex, paper in press, published online, retrieved 1st of June 2005, from http://uit.no/getfile.php?SiteId=89&PageId=1935&FileId=363 (2005).

- Plu74 - R. Plutchik, Emotions profile index - manual, Tech. report, California: Western Psychological Services, 1974.

- Plu80 - --- Emotion: Theory, research, and experience: Vol. 1. Theories of emotion, ch. A general psychoevolutionary theory of emotion, pp. 3-33, New York: Academic, 1980.

- PM86 - P.M. Peterson, Simulating the response of multiple microphones to a single acoustic source in a reverberant room, The Journal of the Acoustical Society of America 80 (1986), 1527-1529.

- Pra70 - R.W. Pratt, Cognitive processing of uncertainty: Its effect on pupillary dilation and preference ratings, Perception and Psychophysics 8 (1970), no. 4, 193-198.

- PS03 - T. Partala and V. Surakka, Pupil size variation as an indication of affective processing, International Journal of Human-Computer Studies 59 (2003), no. 1-2, 185-198.

- RA04 - R.A. Richell and M. Anderson, Reproducibility of negative mood induction: a self-referent plus musical mood induction procedure and a controllable/uncontrollable stress paradigm, J Psychopharmacol. 18 (2004), no. 1, 94-101.

- RSV01 - M. Risch, H. Scherg, and R. Verres, Music therapy for chronic headaches. Evaluation of music therapeutic groups for patients suffering from chronic headaches, Schmerz 15 (2001), no. 2, 116-125.

- SA04 - W. Schmid and D. Aldridge, Active music therapy in the treatment of multiple sclerosis patients: a matched control study, J Music Ther. 41 (2004), no. 3, 225-240.

- San04 - A. Portera Sanchez, Music as a symptom, An R Acad Nac Med (Madr). 121 (2004), no. 3, 501-513.

- SC95 - J. Stutz and P. Cheeseman, Maximum entropy and Bayesian methods, Cambridge 1994, ch. AutoClass - a Bayesian Approach to Classification, Kluwer Academic Publishers, Dordrecht, 1995.

- SD98 - E.D. Scheirer, Tempo and beat analysis of acoustic musical signals, Journal of the Acoustical Society of America 103 (1998), no. 1, 588.

- Sim96 - S.G. Simpson, as cited in W. Leary, As fellow traveler of other illnesses, depression often goes in disguise, New York Times (1996).

- SSRB03 - M. Snowden, K. Sato, and P. Roy-Byrne, Assessment and treatment of nursing home residents with depression or behavioral symptoms associated with dementia: a review of the literature, J Am Geriatr Soc 51 (2003), no. 9, 1305-1317.

- SW99 - L.D. Smith and R.N. Williams, Children's artistic responses to musical intervals, American Journal of Psychology 112 (1999), no. 3, 383-410.

- SZ89 - V. N. Stratton and A. H. Zalanowski, The effects of music and paintings on mood, Journal of Music Therapy 26 (1989), no. 1, 30-41.

- TFHR+03 - A. Tornek, T. Field, M. Hernandez-Reif, M. Diego, and N. Jones, Music effects on eeg in intrusive and withdrawn mothers with depressive symptoms, Psychiatry 66 (2003), no. 3, 234-243.

- Tha95 - Robert Thayer, Beating the blahs, Psychology Today (1995).

- UH03 - Christian Uhle and Juergen Herre, Estimation of tempo, micro time and time signature from percussive music, Proceedings of the 6th International Conference on Digital Audio Effects (DAFX-03), London, UK (2003).

- WB89 - W.M. Hartmann and B. Rakerd, Localization of sound in rooms IV: The Franssen effect, Journal of the Acoustical Society of America 86 (1989), no. 4, 1366-1373.

- Y.85 - Y. Ando, Concert hall acoustics, Springer-Verlag, 1985.

- YKT+95 - Yamada, Kimura, Tomohiko, Funada, Takeaki, Inoshita, and Gen, Apparatus for detecting the number of beats, US Patent No. 5,614,687 (1995).


![Gantt chart (GanntHelseOmsorg)](img2.png)