Next: IV. Validation Up: III. Solution Previous: 9. Module 2: The Contents

UNTIL NOW WE HAVE ADDRESSED two sub-problems. These are a) the problem of enforcing an action on a component in such a way that the concurrency strategy is bypassed and b) the problem of learning how a component is best kept alive. The last module, which we will now present, is necessary to control access to resources by multiple clients.

Firstly we will explain how we can deduce which resources are involved, secondly we will explain what exactly the problem is we want to solve. Thirdly we will explain when a component has control over a certain resource. Fourthly, we will explain how our concurrency module can exert control over the resources involved. And finally we explain how race-conditions can be avoided.

BEFORE WE CAN EXPLAIN how we can manage access to the different resources we need to define which resources we will consider.

A resource in our case is defined as all the ![]() transitions

that can be found in the elementary net, expanded from the colored

Petri-net. Every such a transition is a resource in its own right.

This approach to resources in a Petri-net is good enough for our case.

Clearly the real resources will have a much larger granularity than

the definition we impose here. For instance a resource in our whiteboard

is at least the size of a square. If we consider resources to be the

state of transitions then we have 4 times as many resources: a setPosition(1,1),

returnSetPosition(1,1), getPosition(50,70) and

returnGetPosition(14,3) for every square. However this

is an advantage because it allows for a greater flexibility in aligning

the resources. For instance, with our definition of resources one

can specify that square

transitions

that can be found in the elementary net, expanded from the colored

Petri-net. Every such a transition is a resource in its own right.

This approach to resources in a Petri-net is good enough for our case.

Clearly the real resources will have a much larger granularity than

the definition we impose here. For instance a resource in our whiteboard

is at least the size of a square. If we consider resources to be the

state of transitions then we have 4 times as many resources: a setPosition(1,1),

returnSetPosition(1,1), getPosition(50,70) and

returnGetPosition(14,3) for every square. However this

is an advantage because it allows for a greater flexibility in aligning

the resources. For instance, with our definition of resources one

can specify that square ![]() is locked for all operations and that

square

is locked for all operations and that

square ![]() is locked for the read-operations. Furthermore, this

definition of resources is also complete in the sense that no resource

can be found that cannot be expressed based on the state (enabled

or disabled) of transitions. This definition can be extended to include

the state of

is locked for the read-operations. Furthermore, this

definition of resources is also complete in the sense that no resource

can be found that cannot be expressed based on the state (enabled

or disabled) of transitions. This definition can be extended to include

the state of ![]() places of the core functionality. However we

will continue to work with only the transitions.

places of the core functionality. However we

will continue to work with only the transitions.

A resource within our Petri-nets is defined as every possible logic transition in the elementary Petri-net expanded from the colored Petri-net.

With this definition of resources, we will now investigate what kind of race conditions can occur, and why we need to manage this.

THE NO-CONFLICT REQUIREMENT (given in section 7.2.1

on page ![[*]](crossref.png) ) states that no action requested

by the client should at any moment be impossible on the server. Simply

by connecting the liveness-module to the enforce-action module we

can easily guarantee this. However, if we do so, the no-race requirement

is not satisfied. Below we illustrate why.

) states that no action requested

by the client should at any moment be impossible on the server. Simply

by connecting the liveness-module to the enforce-action module we

can easily guarantee this. However, if we do so, the no-race requirement

is not satisfied. Below we illustrate why.

![\includegraphics[%

height=12cm]{RaceCondition.eps}](img401.png)

|

The reason why connecting the liveness module to the enforce-action

module does not work is that we effectively remove any concurrency

strategy on the server. As such, a client can execute any server action

at any time it wants. This allows for a greater freedom, and effectively

avoids the conflict. However, the server can no longer guarantee that

a transition that is enabled for the first client is not reassigned

to another client during execution. E.g., it is possible for an adaptor

to unlock a resource locked by ![]() , lock the resource for component

, lock the resource for component

![]() , unlock the resource for component

, unlock the resource for component ![]() , and finally lock it

again for component

, and finally lock it

again for component ![]() . Here a concurrency problem will occur because

component

. Here a concurrency problem will occur because

component ![]() expected the resource to be locked the whole time,

while in practice another component was able to change the state of

the given resource. This is pictured in figure 10.2.

expected the resource to be locked the whole time,

while in practice another component was able to change the state of

the given resource. This is pictured in figure 10.2.

To avoid this we should no longer only look at the fact whether a transition is enabled or disabled. The real problem lies with the change of enabled to disabled transitions not with the state they are in. If we want to make a concurrency module that avoids race conditions the concurrency module needs to understand when which component can change the 'enabledness' of a transition, or in other words when which component has control over which resource.

IN THIS SECTION WE INVESTIGATE how resources can be controlled by the different clients. A resource is (as stated earlier) defined as the transitions within the expanded Petri-net. When a process has control over a certain resource it means that the process can access that resource and use it, or modify its state. In our case, we can easily define control over a resource as the ability to execute a transition, or modify the state of the transition. However, not all transitions of a Petri-net can be controlled by the underlying component. Most outgoing messages (messages such as Lock, Read, Write, Unlock) can be under control of the underlying component, however the incoming messages (messages such as LockDone, ReadDone, WriteDone) are typically not under control of the client component. This approach can also be found back in [MA97].

A transition is under control of a client component if it can be executed

immediately or its state can be modified directly or indirectly

through using ![]() messages.

messages.

Because the ![]() and

and ![]()

![]() messages can be sent

(or involuntarily) received, they take part in the process of verifying

whether the state of a transition can be changed. Once an

messages can be sent

(or involuntarily) received, they take part in the process of verifying

whether the state of a transition can be changed. Once an ![]()

![]() message is sent out, the concurrency module will simply pass

it through to the server component. If an

message is sent out, the concurrency module will simply pass

it through to the server component. If an ![]()

![]() message

comes back from the server, this message is again passed through to

the client component. On the other hand,

message

comes back from the server, this message is again passed through to

the client component. On the other hand, ![]()

![]() messages

can be sent out by the client component, but do not need to be passed

through and offer the concurrency adaptor a choice where it can choose

which enabled

messages

can be sent out by the client component, but do not need to be passed

through and offer the concurrency adaptor a choice where it can choose

which enabled ![]()

![]() message to send back. For instance,

such a choice can be the request for a lock. The concurrency adaptor

has the choice to accept or reject the lock by sending back a LockTrue

or LockFalse message. However, both choices will result

in different enabled/disabled

message to send back. For instance,

such a choice can be the request for a lock. The concurrency adaptor

has the choice to accept or reject the lock by sending back a LockTrue

or LockFalse message. However, both choices will result

in different enabled/disabled ![]() transitions.

transitions.

Once a LockTrue is sent back the client component can choose to execute a Read or Write, thus the read and write transitions are under control of the client component. The messages that can be sent back by the server component are readDone and writeDone, thus indirectly under control of the client-component. Given a Petri-net and a corresponding marking, this controlled-by information can be calculated automatically by means of a reachability analysis, similar to the one described in section 8.5.

BEFORE INVESTIGATING HOW WE CAN SATISFY the no-race requirement we need to know what kind of actions can be taken by the concurrency module.

It is clear that the concurrency module can not simply insert new

![]() transitions, because this would alter the runtime

behavior of the system. However, it should have the possibility to

insert

transitions, because this would alter the runtime

behavior of the system. However, it should have the possibility to

insert ![]() transitions at certain places. However, inserting

this kind of messages is already done by the enforce-action module

and the keep-alive module. Nevertheless, the link between the concurrency

module and the enforce-action/keep-alive module is still not fully

explained.

transitions at certain places. However, inserting

this kind of messages is already done by the enforce-action module

and the keep-alive module. Nevertheless, the link between the concurrency

module and the enforce-action/keep-alive module is still not fully

explained.

Therefore, to be able to let the concurrency-adaptor choose a certain action, the keep-alive-adaptor will supply a set of possible futures, sorted according to their priority. This will allow the concurrency-adaptor to first try to reach a certain target future and acknowledge that future. If such a future cannot be reached without breaking the no-race requirement, another future will be tried until a suitable one is found. If no possible future can be realized the component will be set to wait until such a future can be realized.

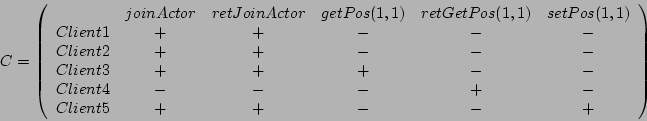

From now on we will assume that the choices offered by the keep-alive

module contains information as how to select a certain future and

control-information that describes which states become under control

of the given component when that future is selected. If a transition

comes under control of the component we will mark it with a '+'. If

a transition does not come under the control of the component we mark

it with a '-', otherwise, if it is undecided we mark the transition

with an '?'. We will write this down as an ![]() matrix, where

matrix, where

![]() is the number of

is the number of ![]() transitions in the exploded colored

Petri-net. For instance

transitions in the exploded colored

Petri-net. For instance

Transitions for which it is undecided what the future will bring, will be considered to be under control of the client component.

GIVEN THE DEFINITION OF RESOURCES and the possibility to choose certain futures that extend different control over the resources, we can now explain how the concurrency module avoids colliding critical sections.

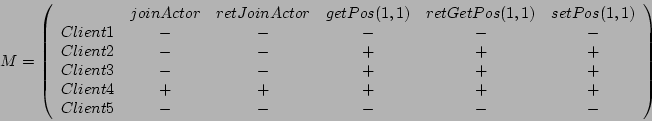

We will do this by means of two matrices. The first matrix, called

the control-matrix is of size ![]() where

where ![]() is the number

of resources available and

is the number

of resources available and ![]() is the number of clients available.

On this matrix the rows contains the clients. On the columns the transitions

(resources) will be placed. If a resource is under control of a client

a

is the number of clients available.

On this matrix the rows contains the clients. On the columns the transitions

(resources) will be placed. If a resource is under control of a client

a ![]() is placed in the matrix, otherwise a

is placed in the matrix, otherwise a ![]() .

.

The second matrix has the same layout but marks whether a certain

resource is managed or not by the concurrency module. This is necessary

for two reasons. First, a resource can always be enabled by different

components (a resource like joinActor for instance) and

second, sometimes certain clients don't have a concurrency strategy

at all. It is clearly impossible to manage concurrency for a client

that does not specify any concurrency strategy, or for resources that

do not take part in a concurrency strategy. Therefore, the concurrency

module needs to keep track of the resources and clients it can and

will manage. If a resource/client is managed it is marked with a ![]() ,

otherwise it is marked with a

,

otherwise it is marked with a ![]() .

.

The above example of the control matrix ![]() and the managed matrix

and the managed matrix

![]() illustrates the difference between both concepts. For instance

column one and two can be a joinActor and a returnJoinActor,

For all clients (except the fourth), this joinActor and

returnJoinActor are under control of the client because

the clients can choose to execute that transition at any time. However,

the fourth actor, is unable to extend control over the joinActor

because it must (for example) first call a joinLocking

first. However, after the first joinLocking message, the

concurrency module will recognize that the joinActor messages

are effectively managed by the client component, hence the

illustrates the difference between both concepts. For instance

column one and two can be a joinActor and a returnJoinActor,

For all clients (except the fourth), this joinActor and

returnJoinActor are under control of the client because

the clients can choose to execute that transition at any time. However,

the fourth actor, is unable to extend control over the joinActor

because it must (for example) first call a joinLocking

first. However, after the first joinLocking message, the

concurrency module will recognize that the joinActor messages

are effectively managed by the client component, hence the ![]() matrix

marks these resources and clients with a true. (

matrix

marks these resources and clients with a true. (![]() ).

).

The columns 3,4 and 5 are the separate operations on all the squares

off the board. The first and second client currently extends no control

over the resources, however, only the second client actually makes

use of a concurrency strategy for those resources (![]() ).

The fifth client on those resource has currently control over the

last resource

).

The fifth client on those resource has currently control over the

last resource ![]() , however, this control is unmanaged by the

concurrency module

, however, this control is unmanaged by the

concurrency module ![]() and as such must be treated differently

when realizing a certain future.

and as such must be treated differently

when realizing a certain future.

Therefore, we will now explain how ![]() and

and ![]() messages

are handled by the concurrency module.

messages

are handled by the concurrency module.

The concurrency module handles incoming ![]() requests by verifying

whether the message is allowed for that component, given the current

situation. If an action is allowed it can be passed through to the

server component. If an action is not allowed, the concurrency module

has no option but to place the action in a queue and wait until a

suitable configuration of the other components arises to send the

message through. An action can be allowed for two reasons. Firstly,

it can be allowed because the action itself is managed by nobody,

or the clients managing the resource currently does not extend any

control over the resource. Secondly, an action can be allowed because

it concerns a resource that is managed by the client-component and

is controlled by the client. Formally:

requests by verifying

whether the message is allowed for that component, given the current

situation. If an action is allowed it can be passed through to the

server component. If an action is not allowed, the concurrency module

has no option but to place the action in a queue and wait until a

suitable configuration of the other components arises to send the

message through. An action can be allowed for two reasons. Firstly,

it can be allowed because the action itself is managed by nobody,

or the clients managing the resource currently does not extend any

control over the resource. Secondly, an action can be allowed because

it concerns a resource that is managed by the client-component and

is controlled by the client. Formally:

A request ![]() coming from client

coming from client ![]() is allowed

is allowed ![]()

A second kind of messages that should be handled by the concurrency

module are requests to choose a certain future. These requests come

from the client component only if there are multiple futures to choose

from. The responsibility of the concurrency module is to choose a

correct future that does not collide with the existing configuration

of other clients. Therefore the ![]() and

and ![]() matrices will be used.

However, three things need to be discussed. First we need to discuss

how we can detect whether a resource is managed, second we will discuss

when a future can be realized and third we will discuss what happens

when such a future is realized.

matrices will be used.

However, three things need to be discussed. First we need to discuss

how we can detect whether a resource is managed, second we will discuss

when a future can be realized and third we will discuss what happens

when such a future is realized.

In the discussion below we assume that a client with identification

![]() has proposed a number of futures

has proposed a number of futures

![]() . As already

explained, a future is specified as a

. As already

explained, a future is specified as a ![]() matrix, where

matrix, where ![]() is the number of resources involved.

is the number of resources involved.

A resource is managed at the moment a client component offers two

different futures for that resource and enables the concurrency module

to choose between both. Therefore, we will update the ![]() matrix

as follows

matrix

as follows

The above line states that a resource is managed if different futures have different control over the resource.

Given this information, we can now verify whether a certain future

![]() can be realized for client

can be realized for client ![]() . In general, a future

. In general, a future ![]() can

be realized if its resources can be realized. A resource

can

be realized if its resources can be realized. A resource ![]() can

be realized only if it realization does not block out all clients

(including itself) to use the resource. To understand better how such

a situation might occur, we investigate all the possible tracks. We

do this by only looking at one resource

can

be realized only if it realization does not block out all clients

(including itself) to use the resource. To understand better how such

a situation might occur, we investigate all the possible tracks. We

do this by only looking at one resource ![]() . If there exists one

offending resource (in

. If there exists one

offending resource (in ![]() ) then the entire future is not realizable.

) then the entire future is not realizable.

Intuitively, when future ![]() specifies that it does not want

to control resource

specifies that it does not want

to control resource ![]() , then client

, then client ![]() will not prohibit any

other client from using the resource. This can be seen in equation

10.1. If

will not prohibit any

other client from using the resource. This can be seen in equation

10.1. If ![]() is false, then the term

is false, then the term

![]() will be true, hence not blocking other

clients from using

will be true, hence not blocking other

clients from using ![]() . On the other hand, if

. On the other hand, if ![]() wants to control

resource

wants to control

resource ![]() then we must look at the state of the other clients.

We now look for a client (denoted

then we must look at the state of the other clients.

We now look for a client (denoted ![]() ) that might give rise to a

situation that locks out all clients.

) that might give rise to a

situation that locks out all clients.

One the concurrency module has determined which futures can be realized,

it will choose one to realize. Realizing a future is easy. The only

thing we need to do is copy ![]() to the correct place in the matrix

to the correct place in the matrix

![]() . Thus:

. Thus:

If no future can be realized the request is placed in a queue until it can be realized.

BELOW WE DISCUSS THIS APPROACH. We investigate how certain clients behave when mediated by means of this module. We will explain that our module in most cases behaves as expected, however in certain special cases it will fail to work.

The first observation about this module is that the concurrency strategy of the server does not matter ! The enforce-action module uses the concurrency strategy only to bring the server in a required state. The fact that we no longer need to take into account the offered concurrency strategy is important for two reasons. First, it is a strong result because not a single server component in such an automatically mediated conversation needs to offer a concurrency strategy. Second, it allows us to look only at the indirect conflicts between different client components that want to access the same server. This is what we will do now.

| client 1 | client 2 | client 3 | without adaptor | with adaptor

unmanaged |

We return to our conflict cases and investigate how our adaptor might improve the communication between multiple clients and a server. To do so, we first observe the behavior of a set of clients with no concurrency strategy. Afterward we observe the same set of clients for which only one requires a concurrency strategy.

If no client involved in a communication session specifies a concurrency strategy then the adaptor will simply pass through all requests. To understand this, we point out that, if no concurrency strategy is introduced, then no resource is managed, hence we end up in branch 2b (section 10.5.2). By passing simply passing through any request, the module will not avoiding race-conditions. Hence, the adaptor will mediate the concurrency conflict and will still exhibit the implicitly required behavior, that is, not to manage concurrency.

On the other hand, if one of the connected clients offers a concurrency strategy, all the unmanaged clients might need to wait until the concurrency managing client has finished its operations. To understand this we point out that a concurrency strategy will lead to managed and controlled resources. When one of those is present, all other clients will set to wait until the one managing its resources has finished.

This strategy clearly improves the working of the system. If the adaptor would simply mediate the differences, the unmanaged concurrency clients might access the server while the managed concurrency strategy is supposed to access resources exclusively. This observation shows how our adaptor might not only mediate, but also improve the concurrency behavior.

| client 1 | client 2 | client 3 | without adaptor | with adaptor

rollback |

The presented concurrency module does not care about livelocks. For instance, if none of the involved components takes the possibility of starvation into account, then the resulting behavior may exhibit a behavior that will lock out a certain client. However, it is possible to insert a learning algorithm in the concurrency module that will learn how to avoid livelocks and learns which timing to apply to keep all involved components alive. Because this behavior was not part of the required interface, this is clearly an improvement. However, improving the working of the whole is not always be possible, such as in the case for deadlocks.

| client 1 | client 2 | client 3 | without adaptor | with adaptor

waiting |

If the involved components require a concurrency strategy which is deadlock-prone, such as a waiting square locking strategy, then the adaptor will not avoid deadlocks. The adaptor will still mediate the differences in syntax, control-flow, re-entrance, granularity conflicts and other variabilities. This shows that the adaptor working as required, however it will not resolve deadlocks automatically. It would be nice to have an adaptor that not only mediates the differences but also avoids any concurrency problems. In section 13.8.6 we will briefly explain how we could approach this. However, our adaptor will be able to detect a deadlock if one might arise.

| client 1 | client 2 | client 3 | without adaptor | with adaptor

staircasing |

Another observation we can make, is that certain implicit requirements

cannot be detected, thus not mediated. Sometimes deadlocks can be

avoided by cooperating clients if they apply a staircasing technique

(see section 5.8.3 on page ![[*]](crossref.png) ). If

the clients apply such a technique then the adaptor mediates the differences

and works as expected, thus avoid deadlocks. However, if another client,

one that does not honor this implicit requirement, joins, then the

whole conversation might deadlock. In section 13.5

we come back to this issue.

). If

the clients apply such a technique then the adaptor mediates the differences

and works as expected, thus avoid deadlocks. However, if another client,

one that does not honor this implicit requirement, joins, then the

whole conversation might deadlock. In section 13.5

we come back to this issue.

![\includegraphics[%

width=1.0\textwidth]{Actor9.eps}](img430.png)

|

The only case where the concurrency module does not behave as expected

is when certain resources are 'virtual', in the sense that they cannot

be deduced from the Petri-net. Such a situation occurs for instance

in conflict 6.12 (page ![[*]](crossref.png) ).

In this conflict the client requires the possibility to first lock

the entire field. However, the Petri-net involved (picture 10.3)

does not have any logic transition that indicates that the

entire server should be considered to be a resource. Specifically,

it is possible that the client requests to enter the server.

This forms two problems. First, because the liveness module has no

choice but return enterOk. Second, because if this future

is involuntarily realized, none of the resources come under control

of the client component. In this case the concurrency adaptor fails

to work as expected. However, if the enter and leave

transitions would be coupled to real logic transitions, then

this would not form a problem.

).

In this conflict the client requires the possibility to first lock

the entire field. However, the Petri-net involved (picture 10.3)

does not have any logic transition that indicates that the

entire server should be considered to be a resource. Specifically,

it is possible that the client requests to enter the server.

This forms two problems. First, because the liveness module has no

choice but return enterOk. Second, because if this future

is involuntarily realized, none of the resources come under control

of the client component. In this case the concurrency adaptor fails

to work as expected. However, if the enter and leave

transitions would be coupled to real logic transitions, then

this would not form a problem.

From these results we can conclude, that if we present our adaptor with a set of concurrency strategies the result will work as expected and mediate the conflict correctly. On the other hand, if the input contains virtual resources or contains implicit requirements, the resulting behavior might be incorrect by introducing deadlocks. However, in every case, the adaptor will still mediate the conflict.

One of the implementation issues we encountered is a problem of performance.

We define a resource as a transition within the expanded elementary

Petri-net. This means that for a simple ![]() whiteboard,

the expanded Petri-net will offer around

whiteboard,

the expanded Petri-net will offer around

![]() resources.

Creating this expanded Petri-net takes a long time if not optimized.

resources.

Creating this expanded Petri-net takes a long time if not optimized.

Also, because the liveness module need to specify which resources they will control, they have to transmit relatively much information. To optimize this only change sets could be used. Also, certain resources are similar. If an act can be done on a resource, then a getPosition is also always possible. Deducing this information might help in optimizing this module, it might also help in better defining what a resource exactly is, given the Petri-net.

IN THIS CHAPTER WE HAVE EXPLAINED how we can easily create a module that avoids race-condition on the server. Firstly we explained which resources we consider. Secondly, we explained the requirement to avoid race conditions. Thirdly, we explained when a component controls a certain resource. This was necessary to be able to specify when a resource is involved in a critical section. Fourthly, we explained how our concurrency module is able to control the behavior of the clients involved and how it manages to avoid race conditions.