Next: 12. Discussion Up: IV. Validation Previous: IV. Validation Contents

IN THIS DISSERTATION, until now, we have explained how we can create a on-line working concurrency adaptor. This adaptor, however has not been created over one night. A number of different kinds of experiments has been performed. These experiments have highly influenced the current construction of the adaptor. Therefore, we feel it is necessary to explain these experiments in more detail. In this chapter we will give an overview of them. In the following chapter we will discuss the adaptor we have presented in this dissertation.

![\includegraphics[%

width=1.0\textwidth]{ExperimentsTraces.eps}](img433.png)

|

PICTURE

Below we will briefly summarize every experiment we have conducted. Initially we tried to create one adaptor to solve the concurrency strategy conflicts. However, after some experiments we took the decision to leave the track of off-line generated adaptors and focused on the practical usability of such an adaptor, hence we tried to make it work on-line. This posed some problems, which forced us to divide the adaptor in different modules as explained in chapter 7. The details of all experiments can be found on-line at http://progpc26.vub.ac.be/ cgi-bin/seescoacvs/component/concurrency/.

DURING THE EXPERIMENTS WE HAVE WORKED with two kinds of approaches. One approach was an off-line approach in which components are supposed to offer a test-component (pictured in figure 11.2). In such a setup, the learning algorithm has the opportunity to make wrong decisions which brings one of the participating components in an invalid and irreparable state. The second approach is an on-line approach in which a learning algorithm, or a learned algorithm, was supposed to work without failure. From both approaches, clearly the second is most realistic, because it can hardly be expected that in an open distributed system a component will offer 'test'-components, nor should any adaptor bring a component in an invalid state. However, as we have already explained in section 4.3.1, the off-line genetic approach offered some valuable insights into the representational problems we faced.

AS EXPLAINED IN CHAPTER

Measuring the correctness of a generated adaptor is not at all easy because it can be a Turing complete process, which means that we actually have to try it and cannot even see when it has stopped, or is executing in a loop and doing something useful. If we would have enough processing power available we could try testing all adaptors in parallel to each other. If we assume that all concurrent processes run equally rapidly we could measure every generated adaptor over a virtually infinite time span. All we would need to do is test all individuals concurrently and summing up the rewards as they are received. When a certain adaptor has become the worst it is killed and replaced by a new adaptor. However, this way of working has a major drawback: it is immensely resource consuming, both computationally and with respect to the memory requirements. [DK96,GW93] Therefore we will need to fall back to another approach.

To solve this problem of measuring a possible Turing complete process we introduce the notion of episodes. (such as in section 4.4.2). An episode is a finite run of a certain adaptor, that is an execution with a limited number of messages that should be handled. The amount of fitness received after this finite time is the reward for that episode. At the beginning of an episode the fitness is always zero (so the fitness of an adaptor does not take into account previous runs of the adaptor.). If at the arrival of a certain message the Petri-net of the adaptor goes into a loop, we terminate it after 2 seconds11.3 and assign the current accumulated rewards as a fitness. If handling a message takes longer than 2 seconds, the component is killed. This technique of working is well known when working with learning algorithms, however often evaluation-steps are measured instead of real time. [Koz92]

The running time for every episode is increased slowly as the number of generations increases. By doing so we not only solve the resource problem of testing all algorithms at the same time, but we increase a number of interesting properties:

Goal: Creation of one adaptor program between two conflicting concurrency strategies.

Means: Genetic Programming with Scheme as a programming language.

Deployment: off-line

Input: No Petri-net description of the conflicting concurrency strategies is present.

Output: Scheme program that is supposed to mediate two conflicting concurrency strategies.

In this small experiment we tested how a Scheme program [SJ75] could be generated automatically that would solve the conflict of a nested locking strategy at one side and a non-nested locking strategy at the other side. Essentially, this is a very easy problem to solve for an adaptor. The only thing it has to do is keep track of a counter, which specifies how many times the client has already locked the resource at the server.

The operations of the genetic algorithm are scheme operations such as +, -, /, *, >, <, <=, >=, and, or, not, sendmessage, set!, define, if and handlemessage. The smallest solution we are looking for is given in algorithm 26. In the program, the first argument given to sendmessage is the port to which to send the message to.

From this simple experiment we observed the following:

|

Goal: Create a concurrency adaptor between two conflicting concurrency strategies

Means: The Pittsburgh Approach to implement a genetic programming approach

Deployment: off-line

Input: Petri-net descriptions of two conflicting concurrency strategies & distributed fitness measure

Output: Classifier system to fill in the missing behavior

Classifier systems (as described in section 4.3.2) are a well known technique to create algorithms by means of a genetic program. Because the Scheme representation suffered from too many problems, we changed our approach substantially. First we introduced a formal interface description under the form of Petri-nets, second we left the track of commonly used languages and investigated the use of classifier systems. In these experiments we were using the previous example of a non-nested versus a nested locking strategy. Both strategies offer simple enter/leave locking semantics. For a quick overview of the parameters of our genetic program we refer to table 11.1.

The goal of this experiment was to create a classifier system automatically (the Pittsburgh approach) that would mediate the differences between two conflicting concurrency strategies. The classifier system should reason about the actions to be performed based on the available input. Therefore we need to represent the external environment in some way and submit it to the classifier input interface. We have investigated the use of two different approaches to represent the external environment.

We now describe the semantics of a single message reactive classifier system.

The input of a classifier consists of the full state of the client and the full state of the server, together with the actions which are required by either one of them. So, at first sight we should create messages which contain the state in which the Petri-net is in and the action requested by the client or the server. Of course, this would allow our classifier system to take invalid actions in a certain context. The classifier could for example decide to send an act message to the server when the server wasn't even locked. To avoid this we decided to represent the client/server state by means of the possible actions either one of them can perform. We can easily obtain these because we have a Petri-net model that describes the behavior of all connected components.

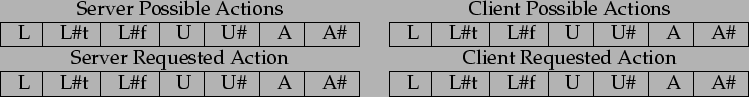

In the case of simple enter/leave locking semantics, we define the semantics of our messages as a 28 bit tuple (shown in figure 11.3), where the first 7 bits are the possible server actions, the next 7 bits represent the possible client actions, the next 7 bits represent the requested server action and the last 7 represent the requested client action.

|

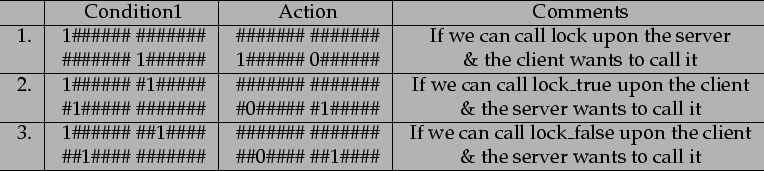

With these semantics for the messages, simply translating a lock from client to server requires three rules (see figure 11.4). One which transforms the client lock request into a server-lock call (rule 1) and two which transform a server lock return into a client lock return. (rule 2 and 3).

|

Although this is a simple example, more difficult actions can be represented. Suppose we are in a situation where the client uses a nested-locking strategy and the server uses a non-nested locking strategy. In such a situation we don't want to send out the lock-request to the server if the lock count is larger than zero. Figure 11.5 shows how we can represent such a behavior.

We now describe the semantics of a multi message reactive classifier system.

The message that resides in the input interface of such a classifier system contains a prefix that specifies what kind of message it is. When 000, the classifier message is about an incoming action from the client, 001 is an incoming action from the server, 011 is an outgoing action to the server and 010 is an outgoing action to the client. The prefix 10 describes the state of the client and 11 describes the state of the server.

The rules of such a classifier system consist of a ternary string representation of the client state and server state (as specified by the Petri-net), as well as a ternary string representing the requested Petri-net transition from either the client process or the server process. With these semantics for the classifier rules, translating a request from the client to the server requires only one rule. Another rule is needed to translate requests from the server to the client (see table 11.2).

The number of bits needed to represent the states of each Petri-net depends on the number of states in the Petri-net as well as the variables that are stored in the Petri-net (e.g., the LockCount variable requires 2 bits if we want to store 4 levels of locking).

| |||||

Although this is a simple example, more difficult actions can be represented. Consider the situation where the client uses a counting-semaphores locking strategy and the server uses a binary-semaphores locking strategy. In such a situation we don't want to send out the lock-request to the server if the lock count is larger than zero. Table 11.3 shows how we can represent such a behavior.

| |||||

In this experiment, which is an offline experiment, two components, with accompanying Petri-net descriptions of their behavior were offered to a genetic algorithm. The genetic algorithm would create complete classifier systems to link the two Petri-nets together. Initially, a single message reactive system was used, but as it turned out this classifier system was only able to learn very local behavior11.4, therefore a multi-message reactive system has been tested. For the single reactive system, we will now define what our individuals are, how crossover and mutation happens and what we do to measure the fitness. These choices are standard choices for classifier systems.

Individuals are initially empty. Every time a certain situation arises and there is no matching gene we will add a new gene. This gene, which is a classifier rule has a matching condition with a random action on the server and/or the client from the list of possible actions. This guarantees that we don't start with completely useless rules which cover situations which will never exist and allows us to generate smaller genes which can be checked faster.

Mutating individuals is done by randomly adapting a number of genes. A gene is adapted by selecting a new random action.

To create a crossover of individuals we iterate over both classifier-lists and each time we decide whether we keep the rule or throw it away.

Fitness is measured by means of a number of test-sets (which has already been discussed in section 13.8.2). As explained, every test-set illustrates a typical behavior (scenario) the client will request from the server.

The genetic programming uses a steady-state genetic algorithm, with a ranking selection criterion: to compute a new generation of individuals, we keep (reproduce) 10% of the individuals with the best fitness. We discard 10% of the worst individuals (not fit enough) and add cross-overs from the 10% best group11.5. It should be noted that the individuals that take part in cross-over are never mutated. The remaining 80% of individuals are mutated, which means that the genes of each individual are changed at random: for every rule, a new arbitrary action to be performed on the server or client is chosen. On top of this, in 50% of the classifier rules, one bit of the client and server state representations is generalized by replacing it with a #. This allows the genetic program to find solutions for problems that have not themselves presented yet.

The scenarios offered by the client are the ones that determine what kind of classifier system is generated. We have tried this with the test scenarios, as illustrated in figure 13.6. Scenario 1 is a sequence: [lock(), act(), unlock()]. In all scenarios, we issue the same list of messages three times to ensure that the resource is unlocked after the last unlock operation.

|

An examination of the results of several runs of our genetic programming algorithm lead to the following observations:

Goal: Create a concurrency adaptor between two conflicting concurrency strategies

Means: Genetic Programming using Petri-nets as a representation

Deployment: off-line

Input: Petri-net description of two conflicting interface & a distributed fitness measure

Output: Petri-net that links the different interfaces

In this experiment, which is again an off-line experiment, another representation was chosen. To avoid the problems of the classifier systems, we now create a high level Petri-net description that will be measured at runtime. The runtime measurement is still distributed and contains two parts. On the server part, it keeps track of how many errors occurs and warnings that happened. On the client side the fitness is measured at how many locks could be obtained. The representation itself is already detailed in section 9.1.

|

With respect to the before mentioned experiments two things became clear. First: we have found a suitable representation to express adaptors, however this representation does not easily produce concurrency solvers. Second, concurrency problems can barely be measured, therefore we started investigating how we can modularize the adaptor. To this end, we have split the behavior of the adaptor in three separate modules, as described in chapter 7.

Experiment: Automatic deduction of reachability analysis

Means: prolog conversion from Petri-net description and prolog analysis

Deployment: off-line

Input: Petri-net description in prolog and a required marking.

Output: A transition to execute to come closer to this marking.

Description: The description of this experiment is entirely contained in chapter 8.

Results: We have tested the working of this adaptor with relative markings and with absolute markings. To obtain absolute markings, we ran a number of test-components which produced an absolute marking from a real-life situation, afterward the prolog analyzer needed to find out how to enable that specific situation. In all cases the results were almost immediately known.

Experiment: Module to keep a client-component alive

Means: Classifier systems, the BBA and the earlier developed Petri-net Representation

Deployment: on-line

Input: Petri-net description of an interface, check-pointed component

Output: New transitions that will compete to keep the client component alive

Results:

Experiment: Module to keep a client component alive

Means: Reinforcement learning, together with the earlier presented Petri-net representation

Deployment: on-line

Input: Petri-net of the interface, check-pointed component

Output: New transitions that will compete to keep the client component alive

Description: In the experiment, we use a number

of different components, with different checkpoints, that will assign

rewards as certain checkpoints are met (see section 9.2,

page ![[*]](crossref.png) ). We will not document where

these checkpoints are set due to space considerations.

). We will not document where

these checkpoints are set due to space considerations.

Results:

The implementation of all these experiments, together with the results are available online. We didn't put them in appendices because this would require around 400 extra pages.

Runtime; Location: http://borg.rave.org/cgi-bin/seescoacvs/component/system/*

Runtime; Files: Component.component,

ComponentSystem.component, AbstractPort.java, AbstractMultiport.java,

AbstractPortCreator.java, ComponentImpl.java,

ComponentImplFactory.java, Connection.java, DirectPort.java,

ExecutionLoop.java, Global.java, InterruptHandler.java, InvocationMessageHandler.java,

Message.java, MessageDispatcher.java, MessageHandler.java, MessageHandlerCreator.java,

MessageQueue.java, MultiPort.java, Port.java, SameHandlerCreator.java,

Scheduler.java, SetupPort.java, SinglePort.java, StandardScheduler.java,

State.java, StreamAble.java, StupidScheduler.java,

SymbolTable.java,

ThreadedInterruptHandler.java

Compiler; Location: http://borg.rave.org/cgi-bin/seescoacvs/component/parser/*

Compiler; Files: Transformer.jjt, SimpleNode.java, and a large amount of parser node definitions. These start with Ast.*.

Controller; Location: http://borg.rave.org/cgi-bin/seescoacvs/testcases/scss/*

Controller; Files: Controller.component,

ConnectionProxy.component,

ContractGenerator.component, ReceivingMonitor.component,

SendingMonitor.component

Location: http://borg.rave.org/cgi-bin/seescoacvs/component/concurrency/*

Evaluator: PetriNetEvaluator.java,

PetriNode.java, StaticNet.java,

DynamicNet.java, ExecMessage.java, ExecutionTrigger.java

Places: Place.java, SinkPlace.java, SourcePlace.java

Transitions: Transition.java, InTransition.java,

ArcEntry.java, InputEntry.java,

MessageTransition.java, OutTransition.java, OutputEntry.java

Expressions: AgAddOperator.java,

AgAndOperator.java, AgCombinedExpression.java,

AgEqOperator.java, AgExpression.java, AgField.java,

AgForeach.java,

AgGlueOperator.java, AgGrOperator.java, AgIdentifierDescription.java,

AgIdxOperator.java, AgInteger.java, AgMessage.java,

AgMulOperator.java,

AgNotOperator.java, AgOperator.java, AgRange.java,

AgSet.java,

AgSubOperator.java, AgToken.java, AgVariable.java,

Applicable.java,

Bindings.java, EqualsExpression.java

Parser & Convertors: PetriNetParser.jj,

Printer.java, PetriNet2Prolog.java,

PetriNetConvertor.java, PetriNetSimplifier.java, PetriNetNormalizer.java

Location: http://borg.rave.org/cgi-bin/seescoacvs/component/concurrency/*

Using classifiers: Adaptor.component,

ClassifierCondition.java, ClassifierList.java, ClassifierMessage.java,

ClassifierMessageList.java, ClassifierRule.java,

ConcurrencyCase1.java, ConcurrencyCase2.java, Gene.java,

MessageArgument.java,

Scenario2_1.java, Scenario2_2.java

Component link: MessageDescription. java,

MessagePlace.java,

MyInvocationMessageHandler.java, MyInvocationHandler.java

The Pittsburgh approach: NeglectSynchro.component,

PetriNetGenerator.java,

PetriNetAdaptor.component, PetriNetLoader.component,

PetriNetCrossover.java,

PetriNetTimer.java, PetriNetCombiner.java, StandardLoader.component

The liveness module: FitnessAction.component,

LearningAdaptor.component,

ReinforcedAdaptor.component, GewogenPlace.java, GewogenTransition.java,

PtReceiver.java

The enforce action module: anal.pl, ForceAction.component, ForceAction1.component, ForceAction2.component, PetriNetBackTracker.java

The concurrency module: SynchroAdaptor.component

Location: http://borg.rave.org/cgi-bin/seescoacvs/component/concurrency/*

Shared Classes: ConcurrencyException.java,

ActorRoot.component, Pos.java,

ThreadCatcher.java

Server Components: PlayFieldView.java, PlayfieldCore.component, Square.java

Client Components: Actor*.component, BallActor*.component, FloodActor*.component, LineActor*.component, Actor*.l2net

IN THIS CHAPTER we have reported on the experiments we have performed. Our solution has changed over time from an initial scheme-representation of a concurrency adaptor, to a modularized reinforcement learning Petri-net adaptor. The path we have followed is a standard, 'prototype, experiment, observe, adjust' cycle in which mistakes are corrected in later experiments.

The experiments have allowed us to understand that creating a learning algorithm that will immediately create a concurrency adaptor is not possible, because the no-race requirement is very difficult to express and to validate.

We also learned that an off-line strategy offers certain advantages to verify the quality of the representation. We tried four different representations of an adaptor before a suitable one was found. These are, a scheme representation, a single message reactive classifier system, a multi message reactive classifier system and a Petri-net representation. Because the latter suited our needs, we stopped investigating which representation should be used.

However, afterward we turned our attention to the off-line/on-line problem. All the experiments initially conducted used an off-line strategy. However, from a pragmatic point of view, it is very unlikely that an off-line strategy can be made to work in an on-line environment. The main reason for this is that, once an off-line strategy becomes fixed in an on-line environment it might be unable to learn what to do in new situations, which have never been present in the off-line context. Therefore, we turned our attention to an on-line learning strategy, under the form of reinforcement learning. This turned out to be a very satisfactory approach.