I recently gave deep learning another go. This time I looked into pytorch. At least the thing lets you program in a synchronous fashion. One of the examples however did not work as expected.

I was looking into the superresolution example (https://github.com/pytorch/examples) and printed out the weights of the second convolution layer. It turned out these were ‘kinda weird’ (similar to attached picture). So I looked into them and found that the orthogonal weight initialization that was used would not initialize a large section of the weights of a 4 dimensional matrix. Yes, I know that the documentation stated that ‘dimensions beyond 2’ are flattened. Does not mean though that the values of a large portion of the matrix should be empty.

The orthogonal initialisation seems to have become a standard (for good reason. See the paper https://arxiv.org/pdf/1312.6120.pdf), yet is one that does not work together well with convolution layers, where a simple input->output matrix is not stratight away available. Better is to use the xavier_uniform initialisation. That is, in the file model.py you should have an initialize_weights as follows:

def _initialize_weights(self):

init.xavier_uniform(self.conv1.weight, init.calculate_gain('relu'))

init.xavier_uniform(self.conv2.weight, init.calculate_gain('relu'))

init.xavier_uniform(self.conv3.weight, init.calculate_gain('relu'))

init.xavier_uniform(self.conv4.weight)

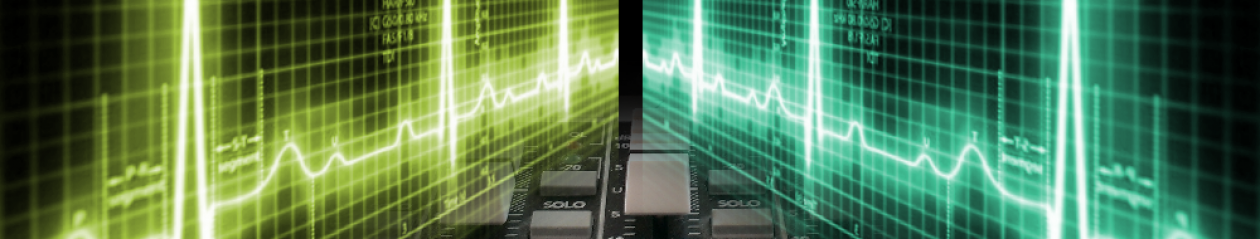

With this, I trained a model on the BSDS300 dataset (for 256 epochs) and then tried to upsample a small image by a factor 2. The upper image is the small image (upsampled using a bicubic filter). The bottom one is the small picture upsampled using the neural net.

The weights we now get at least use the full matrix.

The output when initialized with “orthogonal” weights has some sharp ugly edges: