I apologize in advance for this post. I never wanted to sound like someone who documents his own snoring. I am doing it anyway because I feel I have some important things to share. I will try to stick to facts that might help you, without going too much into my personal situation.

For about half a year I have been trying to get my snoring under control. To that end I got a ResMed AirSense 10 from my doctors/insurers, together with a nasal mask.

Nostrils

The first big obstacle was learning to sleep with my mouth closed. Not much to do but to actually do it. This took some weeks.

Then getting up to speed was problematic because my nostrils would be more closed than open during the night. That led to painful lungs and a less than optimal ‘therapy’. Two things were necessary to resolve this:

a- I got rid of the air filter in the machine. The filter that was installed was actually polluting the incoming air (it hadn’t been changed in at least 6 months and the provider was in no hurry to change it).

b- I started using the humidifier at setting 4. Every morning I would take it out of the machine and leave it open during the day, which allows the water to breathe. Once in a while I would replace the water completely.

With those two tricks I got my nostrils somewhat under control.

Lack of oxygen

Although the daytime headaches vanished immediately after I started using the machine, I now had the symptoms of someone lacking oxygen: I felt really tired in the afternoon. Talking to my doctor did not help much. He argued that I could not be lacking oxygen because there was positive pressure at the inlet.

It took me a couple of months to realize that he was wrong. To understand why, imagine the mask with no vent holes. When you exhale, you fill the long tube, the humidifier and the rest of the machine with used air. The next breath you take is first the old air, then the new. Now imagine only one or two holes. The machine will still be able to generate the necessary pressure, but hardly any air exchange will take place.

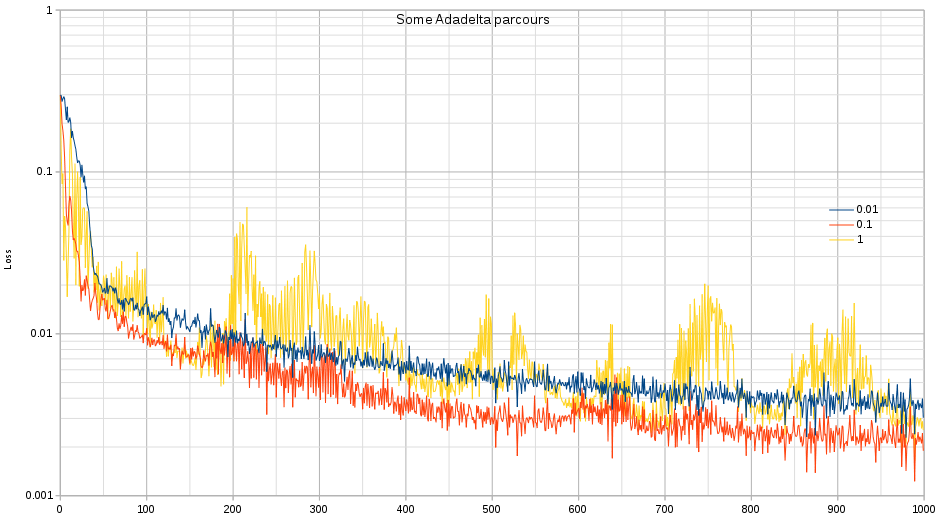

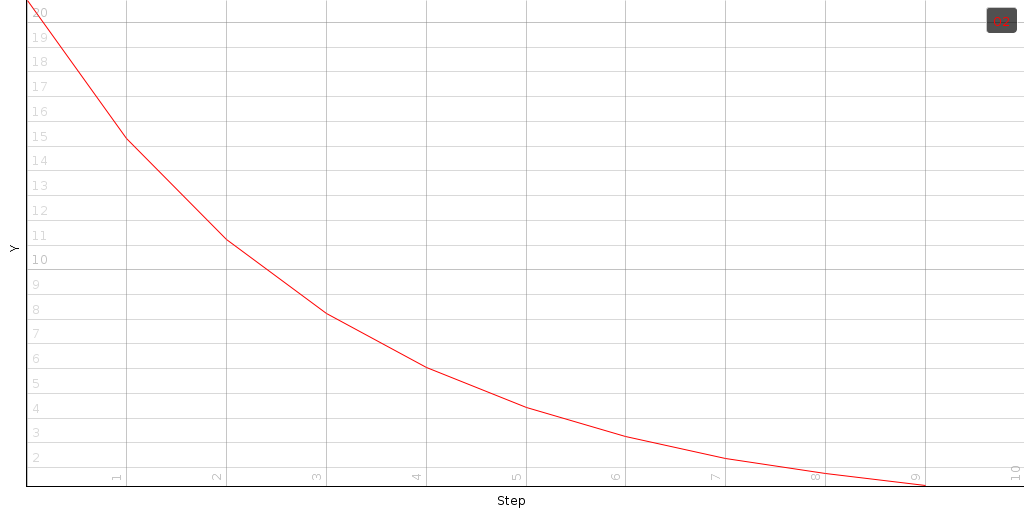

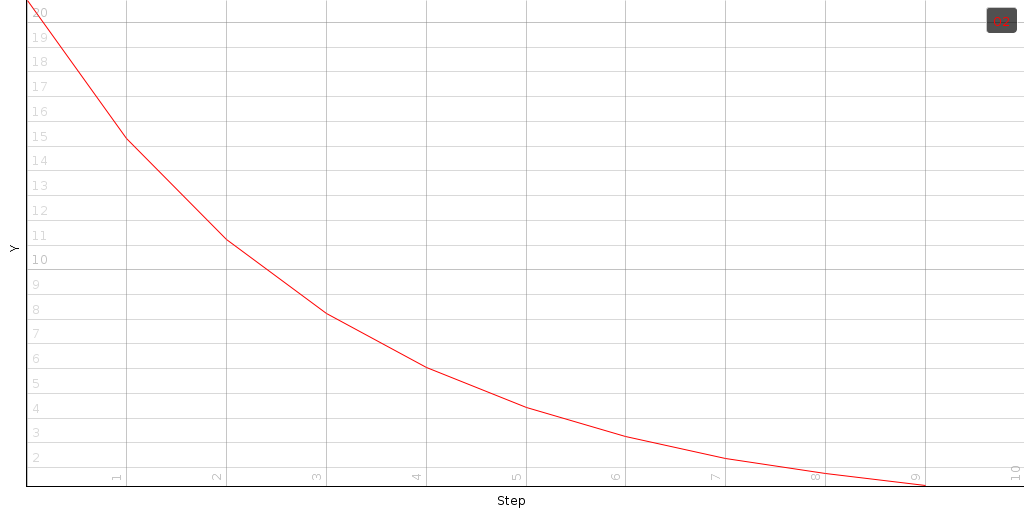

To analyze this further I set up a simulation in which the patient would inhale all the air he just exhaled. Here is what happens to the oxygen level then:

The plot above shows the initial oxygen concentration at about 21%. Each breath removes a quarter of the remaining oxygen, leaving you after 4 breaths with only about a third (0.75^4 ≈ 0.32) of the oxygen in normal air! That is quite staggering.
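The worst case described above is easy to reproduce. What follows is a minimal sketch, not the original simulation: it assumes that each breath uses up a fixed quarter of whatever oxygen is left and that no fresh air enters at all. The names `rebreathe`, `NORMAL_O2` and `UPTAKE_PER_BREATH` are made up for this illustration.

```python
NORMAL_O2 = 0.21          # oxygen fraction of fresh air
UPTAKE_PER_BREATH = 0.25  # assumed: each breath uses a quarter of the remaining oxygen


def rebreathe(n_breaths, o2=NORMAL_O2, uptake=UPTAKE_PER_BREATH):
    """Oxygen fraction before each breath when ALL exhaled air is inhaled again."""
    levels = [o2]
    for _ in range(n_breaths):
        o2 *= 1.0 - uptake  # the breath removes a fixed share of what is left
        levels.append(o2)
    return levels


if __name__ == "__main__":
    for breath, level in enumerate(rebreathe(4)):
        print(f"after {breath} breaths: {level:.1%} oxygen "
              f"({level / NORMAL_O2:.0%} of normal air)")
```

After four breaths the level is 0.75^4 of the starting value, which is the "about a third" mentioned above.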

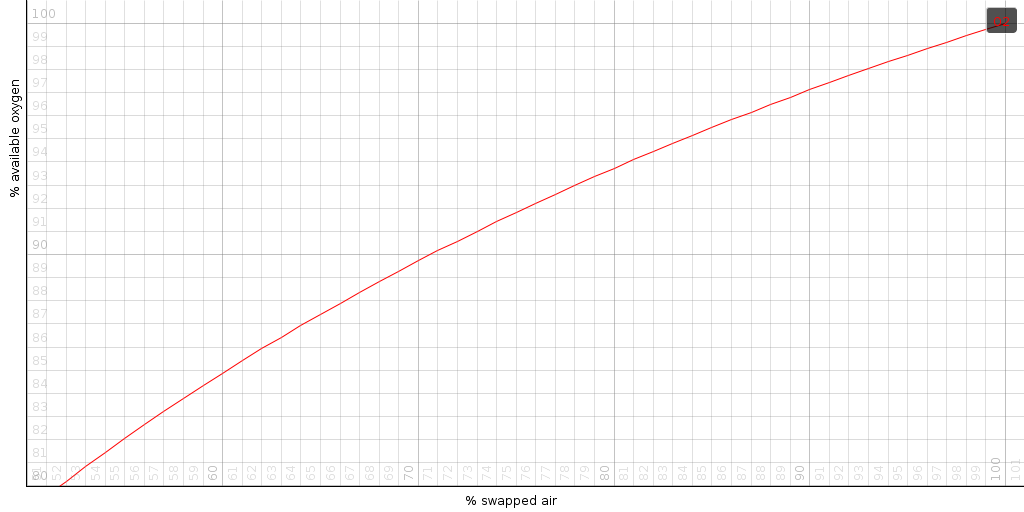

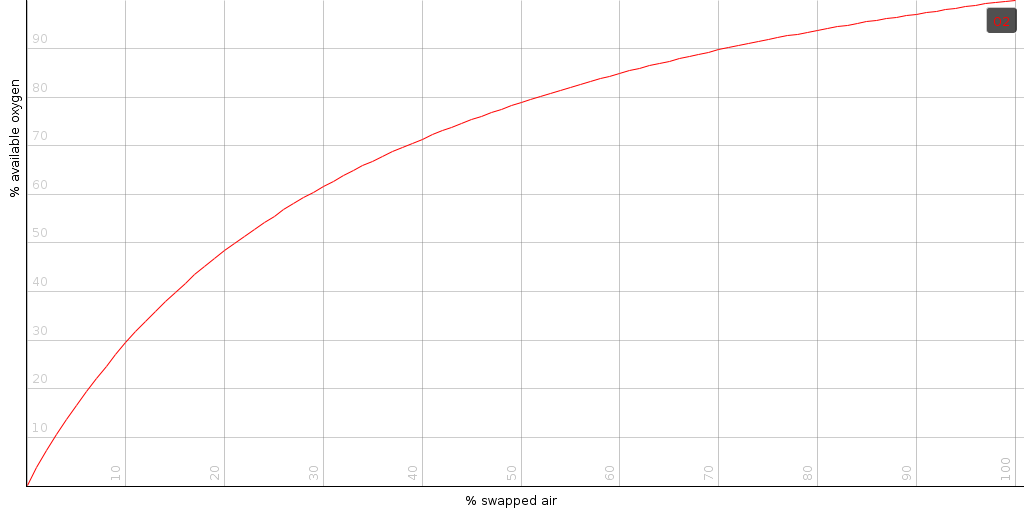

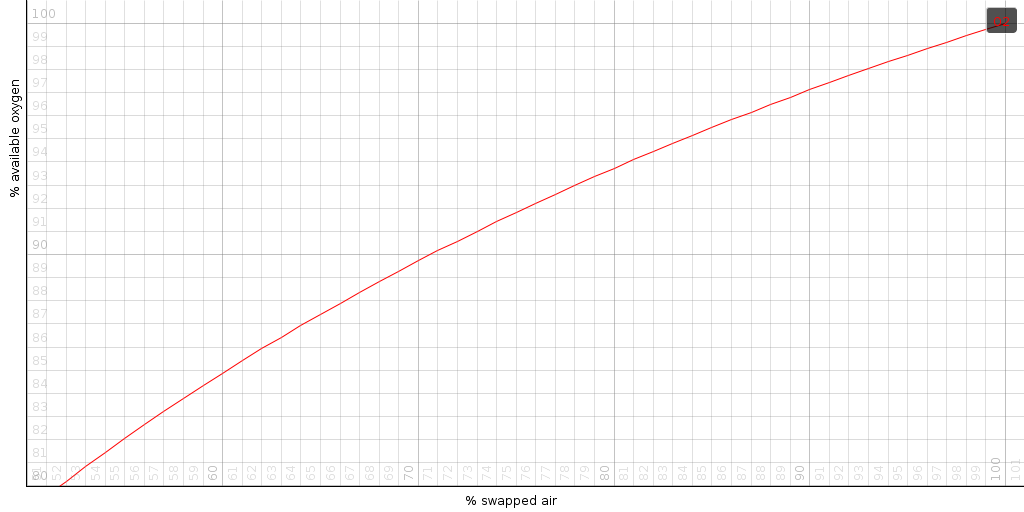

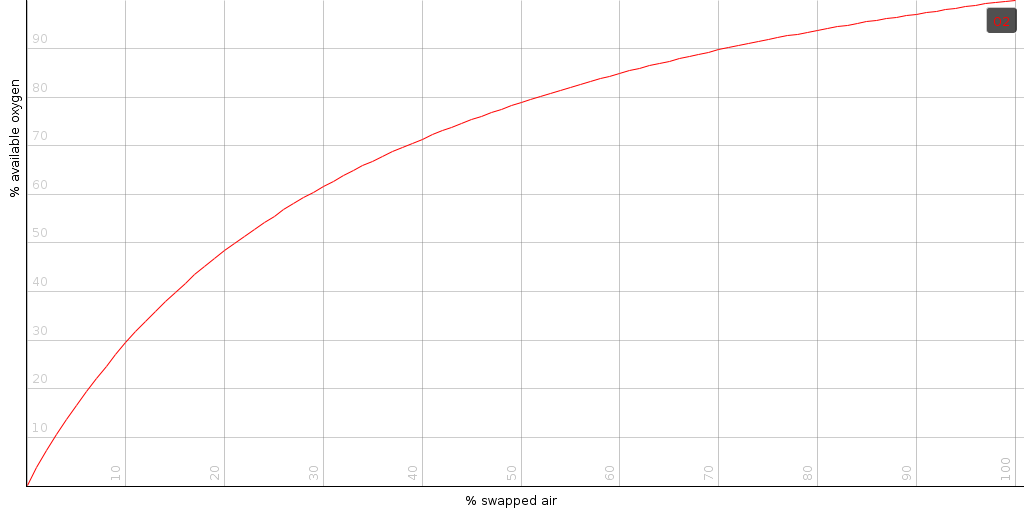

Of course, the ResMed mask does not have closed holes. The positive pressure replaces some of the old air with new air. The question is: how much? This can be expressed as the percentage of the air that is ‘swapped’ per breath cycle. For each percentage, we can calculate the amount of oxygen (compared to normal air) that would be available to you during the night.

From the plot above we can see that if the machine is able to swap out 80% of the air during one breath cycle, you will get 93% of the oxygen in normal air. That is 7% less than you need.
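For readers who want to play with the swap percentage themselves, here is one possible way to model it. This is a sketch under my own assumptions and may differ from the model behind the plot: per breath cycle the body takes a quarter of the oxygen in the inhaled air, then a fraction `swap` of the exhaled air is replaced by fresh air before the next inhalation. The function name `steady_state_oxygen` is hypothetical.

```python
def steady_state_oxygen(swap, uptake=0.25):
    """Relative oxygen level of the inhaled air (1.0 = fresh air) once things settle.

    Assumed cycle: the body removes `uptake` of the oxygen, then a fraction
    `swap` of the used air is replaced by fresh air. The level c settles where
        c = swap * 1.0 + (1 - swap) * (1 - uptake) * c
    """
    if not 0.0 <= swap <= 1.0:
        raise ValueError("swap must be between 0 and 1")
    if not 0.0 < uptake <= 1.0:
        raise ValueError("uptake must be in (0, 1]")
    carried_over = (1.0 - swap) * (1.0 - uptake)  # share of old oxygen that returns
    return swap / (1.0 - carried_over)


if __name__ == "__main__":
    for percent in (20, 40, 60, 80, 100):
        level = steady_state_oxygen(percent / 100)
        print(f"{percent:3d}% swapped -> {level:.0%} of normal oxygen")
```

Under these assumptions an 80% swap settles at roughly 94% of normal oxygen, in the same range as the 93% read from the plot. The exact figure depends on how the oxygen uptake per breath is modeled.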

Clearly we had found the culprit. My air exchange percentage must have been low enough to noticeably reduce the oxygen available to me. The question now was: what to do about it?

Solution #1: turn off the ‘autoset’ feature of the ResMed machine, or increase its minimum pressure substantially. Initially, my machine was set to autoset mode, which means that it tries to determine the best setting for your situation automatically. It moves between the minimum and maximum pressure limits to minimize the number of blockages. Of course, a low minimum can also lower the average pressure that pushes air out of the mask. So although autoset may minimize the apneas, the mask may no longer vent properly. An easy fix is to use a fixed continuous positive pressure, or to increase the minimum pressure. That there is some truth to this can be seen in online reports from people who went from a normal CPAP machine (ResMed 8) to an automatic-setting machine (ResMed 10) and complained that they felt worse with the new machine.

What does the mathematics look like? Ignoring friction and so on, the air speed through a hole can be modeled as the square root of twice the pressure difference divided by the air density: v = sqrt(2 P / rho). With an air density of about 1.2 kg/m^3 and P in pascal this gives roughly v = sqrt(1.67 P) in m/s. The exact constant depends on the units and does not matter for the argument. What matters is the square root: if we raise the minimum pressure by a factor x, we push out around sqrt(x) times as much air.
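To get a feel for the square-root relation, the following sketch computes the idealized vent speed and the gain from raising the pressure. It assumes frictionless flow, an air density of 1.2 kg/m^3, and pressures given in cmH2O (1 cmH2O = 98.0665 Pa). The pressure values 4 and 8 in the example are hypothetical, not my own settings, and the function names are made up for this illustration.

```python
import math

AIR_DENSITY = 1.2      # kg/m^3, assumed value for room air
CMH2O_TO_PA = 98.0665  # pascal per cmH2O


def vent_speed(pressure_cmh2o, density=AIR_DENSITY):
    """Idealized air speed (m/s) through a vent hole: v = sqrt(2 * P / rho)."""
    pressure_pa = pressure_cmh2o * CMH2O_TO_PA
    return math.sqrt(2.0 * pressure_pa / density)


def flow_gain(old_pressure, new_pressure):
    """How many times more air is pushed out after a pressure change."""
    return vent_speed(new_pressure) / vent_speed(old_pressure)


if __name__ == "__main__":
    # Hypothetical example: doubling the minimum pressure from 4 to 8 cmH2O.
    print(f"flow gain: {flow_gain(4.0, 8.0):.2f}x")
```

Doubling the pressure therefore gives only about 1.41 times the vent flow, not twice as much, which is why the minimum pressure has to go up substantially to make a difference.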

Solution #2: turn off Exhalation Pressure Release (the EPR setting). Online you often read that people sleep more easily when the machine makes exhaling easier (it does that by lowering the pressure while you exhale). Yet in doing so, it drastically reduces the amount of air swapped out.

Solution #3: use a larger mask. To test whether you need one, close the main opening of the mask and exhale through the little holes. Measure how long it takes to exhale and compare that with a normal exhalation. If you cannot generate enough pressure to get all the air out within one breath cycle, your mask is too small. This problem can be solved by using a larger mask.